What Is a Good ROAS Benchmark for Facebook Ads?

If you run mobile app campaigns, you have seen Facebook ROAS benchmark figures all over the internet: some say 4×, others 2×. In 2025, Meta’s median sits closer to 2.8×, and for apps, early ROAS can fall below 1×, depending on the monetization model. For instance, Mintegral’s 2025 case study on the personalization app Zedge found that Day‑0 ROAS was just 0.40× on iOS and 0.60× on Android, illustrating how early returns can be far below break‑even.

The problem is that ad costs and tracking rules have shifted. CPMs increased across Meta in 2025, leading to higher acquisition costs. At the same time, Apple’s SKAdNetwork updates and Meta’s push for the Conversions API mean that D1 ROAS can mislead, looking either too good or too bad relative to true long-term value.

That’s why you need clear benchmarks and the right time window (D1, D7, D30, or LTV) to judge campaigns.

In this blog, we'll cover 2025 Facebook Ads ROAS benchmarks, attribution windows, break-even math, and the reporting setup that keeps your team from chasing misleading signals.

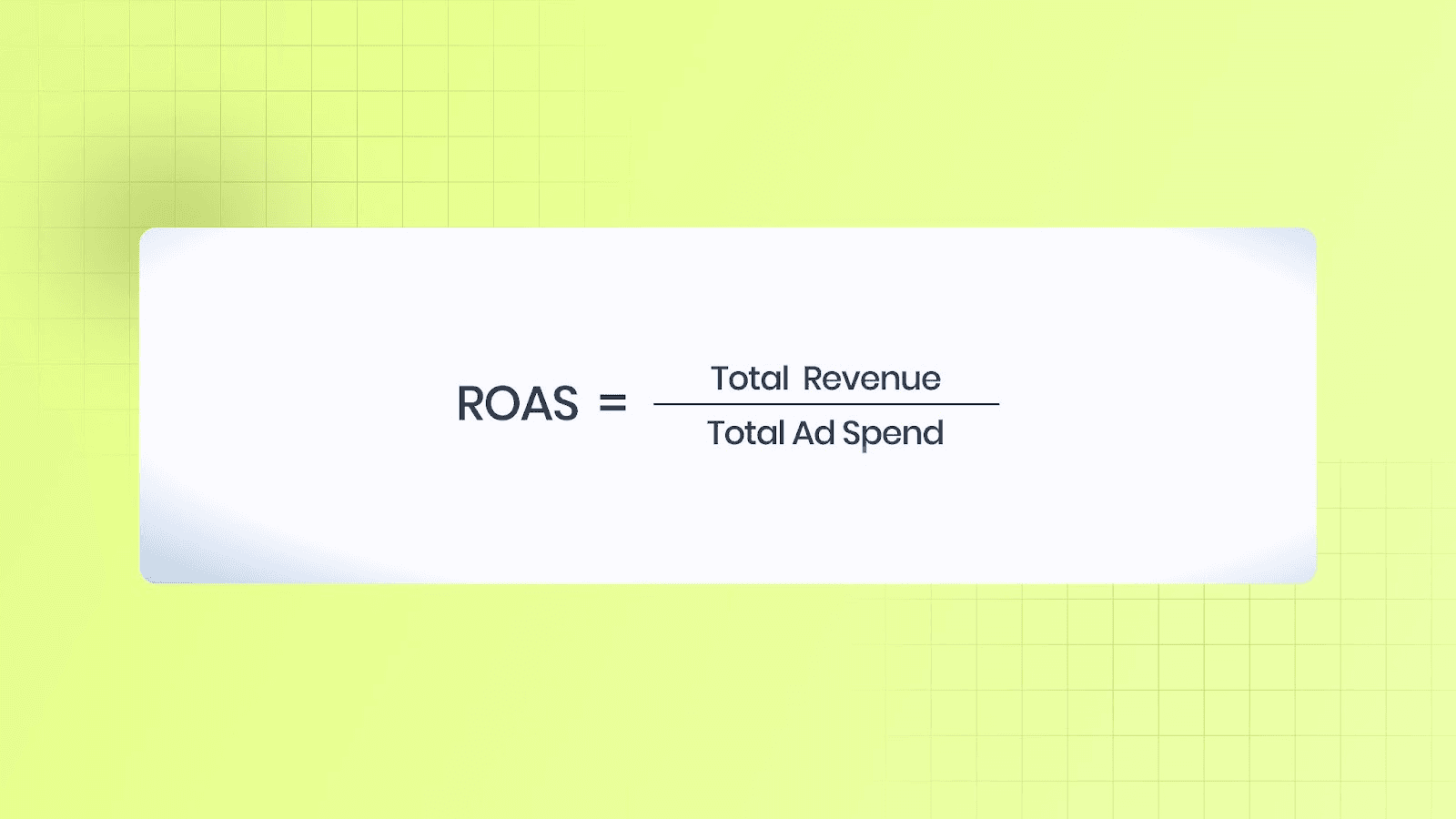

What Does ROAS Mean?

ROAS means Return On Ad Spend. It shows how much revenue you make for every dollar spent on ads.

Example: $1,000 revenue ÷ $200 ad spend = 5.0 ROAS (or 5:1).

This number is quick to read, but it only shows direct revenue, not profit or long-term value.

ROAS Compared to CPA, LTV, and ROI: Which KPI to Use

You should use ROAS for revenue efficiency, CPA for acquisition cost control, LTV to set acquisition caps, and ROI to check net profit. Assign one primary KPI per campaign and stop spending on campaigns that miss that KPI.

Why Use D1, D7, and D30 Attribution Windows?

Revenue doesn’t always appear on day one. Examining multiple time windows reveals how value is built.

D1 (Day 1): Immediate revenue. Suitable for testing creatives fast.

D7 (Day 7): Early repeat buys or in-app spend. Predicts retention quality.

D30 (Day 30): Long-term value, such as subscriptions or repeat orders.

Why This Matters: Attribution delays, platform reporting, and user behavior can cause a single-day ROAS to either understate or overstate the true value. Mobile measurement vendors recommend cohorting and using D1/D7/D30 to create an accurate picture for scaling decisions.

This is the reason you should report ROAS across at least D1, D7, and D30 for a reliable view of campaign performance and to avoid misleading quick wins.
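
To make the windows concrete, here is a minimal sketch of cohort ROAS at D1, D7, and D30. The data layout (pairs of days since install and revenue), the figures, and the convention that a window includes every event up to and including that day are all assumptions for illustration; your MMP or warehouse may count days differently.

```python
from typing import Iterable

def cohort_roas(events: Iterable[tuple[int, float]], spend: float,
                windows: tuple[int, ...] = (1, 7, 30)) -> dict[str, float]:
    """Cumulative cohort revenue through each window, divided by cohort ad spend.

    events: (days_since_install, revenue) pairs for a single install cohort.
    """
    if spend <= 0:
        raise ValueError("spend must be positive")
    events = list(events)
    return {
        f"D{w}": sum(rev for day, rev in events if day <= w) / spend
        for w in windows
    }

# Hypothetical cohort: $500 of spend, revenue arriving over a month.
events = [(0, 100.0), (1, 50.0), (3, 120.0), (6, 80.0), (14, 150.0), (28, 200.0)]
roas = cohort_roas(events, spend=500.0)
for window, value in roas.items():
    print(f"{window}: {value:.2f}x")
# D1: 0.30x
# D7: 0.70x
# D30: 1.40x
```

The same cohort reads as a loss at D1 and D7 and as a clear win at D30, which is why a single window is not enough.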

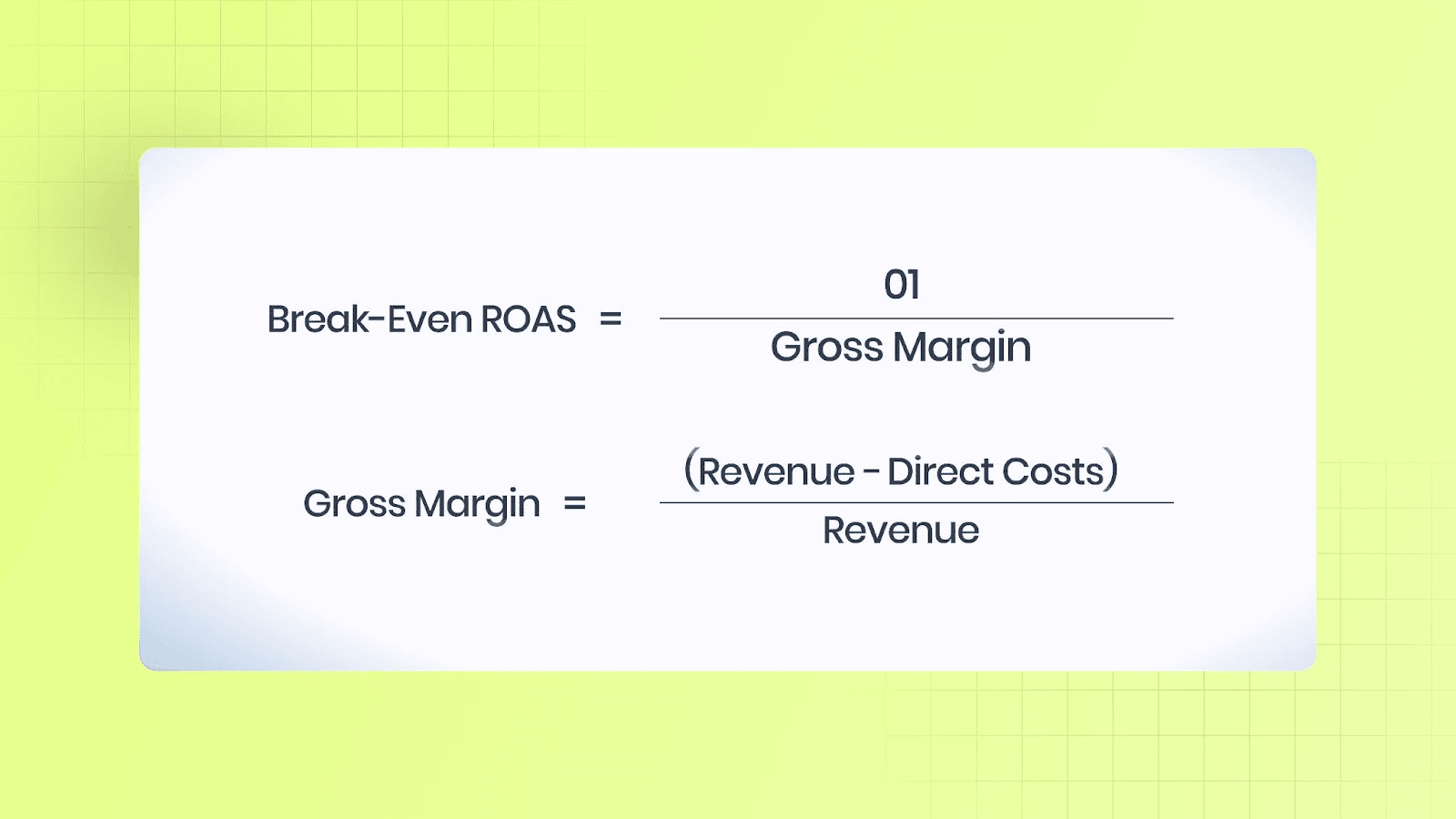

Break-Even ROAS Formula and Logic

Break-even ROAS is the point where ads neither make nor lose money. It equals 1 ÷ margin, where margin is the share of revenue left after direct costs.

If actual ROAS is above this number, you’re profitable; if it’s below, you’re losing money.

Examples:

DTC Product Example:

AOV = $50

Direct costs (product + shipping + fees) = $20

Margin = 60%

Break-Even ROAS = 1 ÷ 0.60 = 1.67

In-App Purchase Example:

ARPU (30 days) = $4.00

Platform fees (App Store / Play Store) = 30% → margin = 70%

Break-Even ROAS = 1 ÷ 0.70 = 1.43

Check each against your D7 or D30 ROAS to decide if spending is safe to scale.
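
The two examples above can be reproduced with a short sketch. The function names are illustrative, and margin is assumed to be expressed as a fraction of revenue.

```python
def margin_from_order(aov: float, direct_costs: float) -> float:
    """Share of each order's revenue left after direct costs."""
    return (aov - direct_costs) / aov

def break_even_roas(margin: float) -> float:
    """Break-even ROAS = 1 / margin, with margin as a fraction of revenue."""
    if not 0 < margin <= 1:
        raise ValueError("margin must be in (0, 1]")
    return 1.0 / margin

dtc = break_even_roas(margin_from_order(aov=50.0, direct_costs=20.0))
app = break_even_roas(1 - 0.30)  # 30% store fee leaves a 70% margin
print(f"DTC: {dtc:.2f}, in-app: {app:.2f}")  # DTC: 1.67, in-app: 1.43
```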

Inputs Checklist For Accurate ROAS Math

Use net revenue (after discounts and refunds).

Include COGS, shipping, fulfillment, and fees.

Subtract platform cuts for apps (App Store / Play Store).

Match currency and time windows between the ad platform and the finance department.

Use cohort ARPU/AOV for the same D1, D7, and D30 window.

Clean inputs stop you from overestimating campaign success. Use ROAS for quick campaign checks, but always compare it to break-even and LTV. For sharper testing, focus on D1 and D7; for scaling, use D30.
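
As a rough illustration of the checklist, the sketch below derives net revenue, margin, ROAS, and break-even ROAS from one set of cohort inputs. The class, its field names, and the numbers are hypothetical; the point is that ROAS computed on gross revenue reads higher than ROAS on net revenue.

```python
from dataclasses import dataclass

@dataclass
class CohortInputs:
    gross_revenue: float
    discounts: float
    refunds: float
    direct_costs: float  # COGS + shipping + fulfillment + fees (or store cut)
    ad_spend: float

    @property
    def net_revenue(self) -> float:
        return self.gross_revenue - self.discounts - self.refunds

    @property
    def margin(self) -> float:
        return (self.net_revenue - self.direct_costs) / self.net_revenue

    @property
    def roas(self) -> float:
        return self.net_revenue / self.ad_spend

    @property
    def break_even_roas(self) -> float:
        return 1.0 / self.margin

# Hypothetical D30 cohort, all figures in the same currency and window.
c = CohortInputs(gross_revenue=12_000, discounts=1_000, refunds=1_000,
                 direct_costs=4_000, ad_spend=5_000)
print(f"net ROAS {c.roas:.2f} vs break-even {c.break_even_roas:.2f}")
print(f"gross-based ROAS would read {c.gross_revenue / c.ad_spend:.2f}")
```

Here the net figure (2.00×) still clears break-even (1.67×), but the gross figure (2.40×) would overstate the cushion.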

A good way to ground all of this in reality is by looking at where the market stands today. Benchmarks give you a reference point to separate strong performance from wishful thinking and help you decide when it’s time to optimize versus scale.

What Is a Benchmark ROAS on Facebook Ads (2025)?

Mobile app marketers and creative ad teams require precise, up-to-date ROAS benchmarks to establish targets and assess campaign performance. The sections below provide 2025 platform and industry medians, short explanations of why the numbers sit where they do, and compact takeaways that can be applied immediately:

1. Platform Snapshot: Overall Median (2025)

Median ROAS range: ~2.5×–3.0× (platform median ≈ 2.79×).

This is a platform-level snapshot for 2025 that pools many verticals and campaign mixes.

Why this matters: the platform median is a starting reference only. Seasonal spikes and campaign mix (prospecting vs. retargeting) shift the median. Use it to understand general platform efficiency, then layer in industry-specific details.

Takeaway: treat ~2.8× as a baseline. Compare campaign performance to this baseline only after segmenting by vertical and campaign type.

2. DTC / E-commerce: Broad View (2025)

Typical median ROAS range: ~2.5×–5×

Why this range occurs: DTC outcomes depend on gross margin, average order value, the split between subscription and one-time purchases, and the allocation between prospecting and retargeting spend. High-margin brands can be profitable at lower ROAS; low-margin brands need higher ROAS.

Practical note: measure prospecting and retargeting separately. Retargeting cohorts typically show higher ROAS than median totals.

Takeaway: Compute breakeven ROAS from margins first. Then evaluate where the brand sits in the 2.5×–5× band.

3. Apparel & Fashion (DTC subcategory)

Typical median ROAS range: ~2×–3×

Why lower: Strong competition, frequent promotions, and seasonality often compress margins and ROAS.

Actionable move: focus on conversion lifts and raising average order value (bundles, cross-sell), which tend to improve ROAS more reliably than cutting media spend.

Takeaway: aim for the higher end of the 2×–3× range by improving conversion rate and AOV.

4. Beauty & Personal Care (DTC subcategory)

Typical median ROAS range: ~1.3×–2.5×

Why the long-term picture is stronger: the first-purchase range can look low, but repeat purchases and subscription/refill models lift long-term ROAS versus one-time products.

Measurement tip: Evaluate ROAS over longer LTV windows when subscriptions or refill behavior is present.

Takeaway: Use cohort LTV (Day-30/90) to set acquisition targets for refill and subscription offers.

5. Subscription DTC (Boxes and Consumables)

Observed pattern: initial ROAS is often low (≈ 1×–2×), then improves when LTV accrues.

Why: acquisition cost is amortized across recurring payments; first-month ROAS can understate lifetime value.

Takeaway: set targets using Day-30/90 LTV projections, not only first-month ROAS.

6. Mobile Apps: Non-Gaming (Finance, Dating, Utilities)

Typical median ROAS range: ~0.3×–2× (wide spread). April 2025 median for mobile apps was around 0.5× in several benchmark pulls.

Why is there a wide variation? It comes from the monetization model (subscription, one-time purchase, or ad revenue), platform differences (iOS vs. Android), and differences in CAC across app types.

Measurement guidance: pair acquisition ROAS with cohort LTV (Day-7/30/90) and CAC to assess economics.

Takeaway: short-term ROAS alone can be misleading for apps; cohort LTV is essential.

7. Mobile Games: By Monetization and Genre (2025)

Reported medians for gaming can look healthy at the platform level, but subgenres differ sharply.

Representative genre ranges:

Hypercasual: ~0.2× to 1.2× early ROAS (typically well below break-even on Android; top iOS titles and the best creatives can approach or exceed 1× at D30). Ad-first hypercasual games rely on very high retention and regional eCPMs to drive profitability.

Casual / Puzzle: ~0.4× to 1.8× early ROAS. Casual titles exhibit the widest variation by platform; top IAP-driven casual titles can surpass 1× by D30 and significantly increase over their lifetime. Liftoff’s 2025 casual report shows a D30 ROAS of ~47% on iOS versus ~15% on Android, which serves as a helpful reference.

Midcore / Hardcore: ~1.5×–6× lifetime ROAS (or higher for best iOS geos and strong IAP titles). Midcore titles with strong IAP funnels and good retention consistently outperform ad-first genres, especially in high-value regions; lifetime returns heavily depend on genre and location.

Why eCPM Matters: Ad revenue per install (eCPM), retention curves (D1/D7/D30), and IAP conversion rates collectively determine the break-even ROAS for ad-first and hybrid titles. Small changes in geo mix or rewarded video eCPM can often shift a title from loss to profit.

Takeaway: Evaluate CPI, Day-7/30 LTV, and eCPM together; genre and geo mix usually shift profitability more than headline ROAS.
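
A minimal sketch of how eCPM feeds into per-install ROAS for an ad-first or hybrid title. The function names and all inputs (CPI, impressions per user, eCPM, net IAP revenue) are hypothetical values chosen for illustration, not benchmarks.

```python
def ad_revenue_per_install(impressions_per_user: float, ecpm: float) -> float:
    """Ad revenue per install: impressions shown x eCPM / 1000."""
    return impressions_per_user * ecpm / 1000.0

def install_roas(cpi: float, net_iap_per_install: float,
                 impressions_per_user: float, ecpm: float) -> float:
    """Revenue per install (net IAP + ads) divided by cost per install."""
    revenue = net_iap_per_install + ad_revenue_per_install(impressions_per_user, ecpm)
    return revenue / cpi

# Same title and cohort window, two hypothetical eCPM levels (e.g. two geo mixes).
low = install_roas(cpi=0.50, net_iap_per_install=0.05,
                   impressions_per_user=40, ecpm=8.0)
high = install_roas(cpi=0.50, net_iap_per_install=0.05,
                    impressions_per_user=40, ecpm=12.0)
print(f"eCPM $8: {low:.2f}x, eCPM $12: {high:.2f}x")  # eCPM $8: 0.74x, eCPM $12: 1.06x
```

With everything else held constant, the eCPM change alone moves this hypothetical title from below 1× to above it.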

8. SaaS / B2B / Lead Generation: Reframe ROAS

How to think about it: ROAS alone rarely tells the whole story for lead funnels. Translate cost per lead into qualified leads, then into recurring revenue to derive an effective ROAS.

Practical workflow: build a lead→SQL→revenue model and convert CPL into target ROAS based on expected MRR/ARR contribution.

Takeaway: prioritize pipeline metrics early; use revenue mapping to turn CPL into a meaningful ROAS target.
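
One way to sketch the lead→SQL→revenue model is below. The conversion rates, MRR, and revenue horizon are hypothetical inputs, and revenue is taken as MRR multiplied by a fixed number of months, which ignores churn and expansion.

```python
def lead_gen_roas(cpl: float, lead_to_sql: float, sql_to_customer: float,
                  mrr: float, months: int) -> float:
    """Effective ROAS: revenue per customer over the horizon / cost per customer."""
    cost_per_customer = cpl / (lead_to_sql * sql_to_customer)
    return (mrr * months) / cost_per_customer

def max_cpl(target_roas: float, lead_to_sql: float, sql_to_customer: float,
            mrr: float, months: int) -> float:
    """Highest CPL that still meets the target ROAS under the same funnel rates."""
    return (mrr * months) * lead_to_sql * sql_to_customer / target_roas

effective = lead_gen_roas(cpl=40.0, lead_to_sql=0.25, sql_to_customer=0.20,
                          mrr=100.0, months=12)
cap = max_cpl(target_roas=3.0, lead_to_sql=0.25, sql_to_customer=0.20,
              mrr=100.0, months=12)
print(f"effective ROAS {effective:.2f}x, CPL cap for 3x: ${cap:.2f}")
```

With these inputs, a $40 CPL works out to about 1.5× over twelve months, and hitting 3× would require a CPL near $20.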

These 2025 benchmarks provide a clear starting point: use the platform median (~2.79×) for context, then set tighter targets based on vertical, monetization model, and cohort LTV. Exact targets should be derived from breakeven calculations and the app’s Day-30/90 LTV outlook.

Also Read: CPI, IPM, and ROAS Benchmarks for Optimizing Ad Spend

Understanding ROAS benchmarks is only half the picture; cost dynamics complete the story. Even the strongest creative or targeting can underperform if CPMs climb faster than your monetization can support.

CPM and Cost Trends on Meta in 2025: Impact on Target ROAS

Meta CPMs and general ad costs rose in 2025. Multiple industry trackers show that Meta (Facebook and Instagram) median CPMs are often in the mid-to-high single digits in dollar terms, and sometimes higher in certain quarters or verticals.

Higher CPM directly increases the ad spend required to acquire a user, so target ROAS must be set based on current CPM/CPI figures rather than fixed multipliers. Use live CPM/CPI benchmarks for planning.

Practical math to align targets with costs:

Start with the current CPI (cost per install) or CPM from recent campaign data or a trusted tracker. For example, if CPI = $2 and 90-day LTV = $6, long-run ROAS = 6 ÷ 2 = 3×.

If CPM or competition pushes CPI to $3, the same LTV yields a ROAS of 6 ÷ 3 = 2×.

This simple step illustrates why rising CPMs necessitate increasing LTV, reducing CPI through stronger creatives, or accepting a lower short-term ROAS while optimizing for the longer term.
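
The arithmetic above, plus the link from CPM to CPI, can be sketched as follows. The click-through and install rates are hypothetical, chosen so that a $10 CPM implies the $2 CPI used in the example.

```python
def cpi_from_cpm(cpm: float, ctr: float, install_rate: float) -> float:
    """CPI implied by a CPM: cost per impression / installs per impression."""
    return (cpm / 1000.0) / (ctr * install_rate)

def ltv_roas(ltv: float, cpi: float) -> float:
    return ltv / cpi

def max_cpi(ltv: float, target_roas: float) -> float:
    """Highest CPI that still meets the target ROAS at a given LTV."""
    return ltv / target_roas

ltv_90 = 6.0
for cpm in (10.0, 15.0):
    cpi = cpi_from_cpm(cpm, ctr=0.01, install_rate=0.50)
    print(f"CPM ${cpm:.0f} -> CPI ${cpi:.2f} -> ROAS {ltv_roas(ltv_90, cpi):.1f}x")
print(f"CPI cap for a 3x target: ${max_cpi(ltv_90, 3.0):.2f}")
```

Holding creative performance fixed, a 50% CPM increase takes this example from 3× to 2×; lifting CTR or install rate is what pulls CPI back down.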

Placement and format shifts matter. Reels and short-form placements are drawing big budgets and shifting price pressure across inventory. Costs vary by placement, industry, and targeting; benchmarks differ by vertical. Use placement-level CPM and CPI to set creative tests and to choose where to scale spend.

Measurement Changes to Watch

Recent privacy updates have impacted how ROAS is reported. Apple’s SKAdNetwork now supports multiple postbacks across conversion windows, providing cohort-style signals instead of instant per-install revenue. As a result, last-click, day-1 ROAS can be misleading. Plan for multi-postback windows of several weeks when mapping ad signals to revenue.

Meta’s Aggregated Event Measurement limits client-side event data. The Conversions API (server-side events) is crucial for restoring signal and improving event matching for opted-out users. Ensure server events are sent, domains are verified, and event coverage is high to improve Meta’s optimization. Send events from both the pixel and the server with a shared event ID so Meta can deduplicate them.
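
Deduplication depends on the browser and server copies of an event carrying the same event name and event ID. The sketch below only builds a server-side purchase payload; it does not send anything. The field names follow the Conversions API event schema as commonly documented (`event_name`, `event_time`, `event_id`, `action_source`, `user_data`, `custom_data`), but treat them as assumptions and check Meta's current reference before relying on them. The helper names, URL, and user agent are hypothetical.

```python
import hashlib
import time
import uuid
from typing import Optional

def hash_email(email: str) -> str:
    """Normalize (trim, lowercase) and SHA-256 hash an email before sending."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()

def build_purchase_event(email: str, value: float, currency: str, event_id: str,
                         source_url: str, user_agent: str,
                         event_time: Optional[int] = None) -> dict:
    """Server-side copy of a purchase; event_id must match the pixel's event ID."""
    return {
        "event_name": "Purchase",
        "event_time": int(time.time()) if event_time is None else event_time,
        "event_id": event_id,
        "action_source": "website",
        "event_source_url": source_url,
        "user_data": {"em": [hash_email(email)], "client_user_agent": user_agent},
        "custom_data": {"value": value, "currency": currency},
    }

# Generate one ID per conversion and pass it to both the pixel and the server call.
shared_id = str(uuid.uuid4())
event = build_purchase_event("User@Example.com ", 9.99, "USD", shared_id,
                             source_url="https://example.com/checkout",
                             user_agent="Mozilla/5.0 (hypothetical)")
print(event["event_id"] == shared_id)  # True
```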

How to report ROAS reliably today:

Treat SKAN postbacks and aggregated events as cohort signals. Map SKAN conversion values to small revenue or engagement cohorts so that postbacks serve as LTV proxies rather than exact per-user revenue.

Send server-side events through the Conversions API and track an event coverage ratio, keeping it as close to full coverage as practical, to reduce blind spots in optimization.

Build dashboards that compare modeled/platform ROAS (SKAN + AEM + CAPI) against internal revenue systems on weekly cohorts. Expect lag, noise, and sampling differences; measure against 30–90 day LTV windows where possible.
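
As an illustration of treating SKAN conversion values as cohort proxies, the sketch below maps revenue into a small set of buckets and turns postback counts back into a cohort revenue estimate. The bucket boundaries are hypothetical, and the scheme uses only a few of the 64 available fine conversion values (0–63); a production mapping would be tuned to your revenue distribution.

```python
import bisect

# Hypothetical upper bounds (USD) per conversion value; value 0 means no revenue.
BUCKET_UPPER = [0.0, 0.99, 2.99, 4.99, 9.99, 19.99, 49.99]

def encode(revenue: float) -> int:
    """Conversion value for a user's revenue; anything above the last bound gets
    the open-ended top value, len(BUCKET_UPPER)."""
    return bisect.bisect_left(BUCKET_UPPER, revenue)

def bucket_estimate(value: int) -> float:
    """Revenue proxy for a conversion value: bucket midpoint, or the lower
    bound for the open-ended top bucket (a deliberately conservative choice)."""
    if value <= 0:
        return 0.0
    if value >= len(BUCKET_UPPER):
        return BUCKET_UPPER[-1]
    return (BUCKET_UPPER[value - 1] + BUCKET_UPPER[value]) / 2

def cohort_revenue_estimate(postback_counts: dict[int, int]) -> float:
    """Estimated cohort revenue from counts of postbacks per conversion value."""
    return sum(bucket_estimate(v) * n for v, n in postback_counts.items())

# Hypothetical weekly cohort: 1,000 postbacks, most with no revenue.
counts = {0: 900, 1: 60, 2: 30, 4: 10}
estimate = cohort_revenue_estimate(counts)
print(f"estimated cohort revenue: ${estimate:.2f}")  # estimated cohort revenue: $164.30
```

Dividing that estimate by the cohort's spend gives a modeled ROAS that can sit next to internal revenue in the same weekly view.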

Why LTV must anchor decisions now: Single-day ROAS under privacy-limited measurement often understates an ad’s long-term revenue, especially for apps that monetize over weeks or months. Judging creatives on day-1 ROAS against acquisition cost alone can hold back creatives that produce higher lifetime returns. Use LTV windows (30, 60, 90 days) as the primary profitability metric.

Set ROAS targets based on the real cost environment, combine SKAN, aggregated events, and the Conversions API into cohort ROAS, and let LTV windows guide spend and creative scaling.

Also Read: Implement and Optimize Facebook Ad Tools for 2025

Once the tools are in place, the next challenge is timing. Attribution windows determine how you read performance, and choosing the wrong one can skew your entire strategy.

Which ROAS Window to Use (D1 / D7 / D30 / LTV)

Mobile app marketers and creative teams must select an attribution window that aligns with the product’s buying cycle and monetization timeline. The wrong window produces a false pass or a false fail. Below are clear, practical rules for choosing short (D1/D7) versus long (D30 / LTV) windows and what to watch for when reporting:

1. Short Windows: When D1 or D7 Is the Right Read

Use D1 or D7 when the app’s revenue occurs very quickly after install, and the goal is fast creative or funnel validation. These windows are helpful for creative A/B testing, early stop/go decisions on creative, and quickly identifying broken flows. For many creative tests, a D7 ROAS offers a reliable early indicator, removing the need to wait a full month. Tools used by UA teams report D1/D7 ROAS as standard cohort outputs to accelerate creative cycles.

When to prefer D1/D7:

Short onboarding flows that convert within a few sessions.

Campaigns that require quick iteration on ads or creatives.

Early-stage soft-launch tests where signal speed matters more than complete LTV.

2. Longer Windows: When to Use D30 and LTV (Day-30/Day-90)

Select D30 or Day-90 LTV when meaningful revenue is generated later, or when subscriptions, repeat purchases, or ad monetization drive value over time. For subscription apps and many DTC flows, revenue commonly builds over weeks; Day-30 and Day-90 ARPU/LTV are the standard anchors for profitability and scale decisions. Industry measurement guides and monetization reports indicate that Day-30/Day-90 are core reporting windows for reliable ROI work.

When to prefer D30/LTV:

Subscription products where trial-to-paid conversions and renewals matter.

Games with IAP or ad-eCPM that generate substantial revenue after the initial install.

DTC flows where repeat purchases often occur within 30–90 days.

Recommendations by product type (simple rule set)

Mobile games: favor D7 and D30 for ROAS and LTV checks. Use D7 to detect early monetizers and D30 to validate payback and ad revenue curves before scaling. Use genre-specific targets (hypercasual vs. midcore) when interpreting those windows.

DTC / e-commerce apps: measure purchases across 30–90 days. Short windows can undercount returning buyers and promo-driven repeat orders. Set acquisition targets against 30–90 day purchase rates tied to margin math.

Subscription and finance apps: anchor decisions to Day-90 (or longer) ARPU/LTV. Early ROAS may appear small, but lifetime margins and retention ultimately determine the true unit economics.

What to Report (So Teams Don’t Chase the Wrong Signal)

Report ROAS at D1, D7, and D30 at a minimum, together with a Day-90 or lifetime LTV where possible. Label which figure is primary for each campaign (fast-test vs. scale campaign). Treat privacy-limited signals (SKAdNetwork / Aggregated Event Measurement) as cohort proxies and map them into the same windows in dashboards, ensuring comparisons are apples-to-apples.

Pick the shortest window that gives a reliable signal for the decision at hand, and always validate that signal against a longer LTV window before allocating large-scale budgets.

Now that the fundamentals are in place, what follows are the practical levers you can adjust to directly influence ROAS.

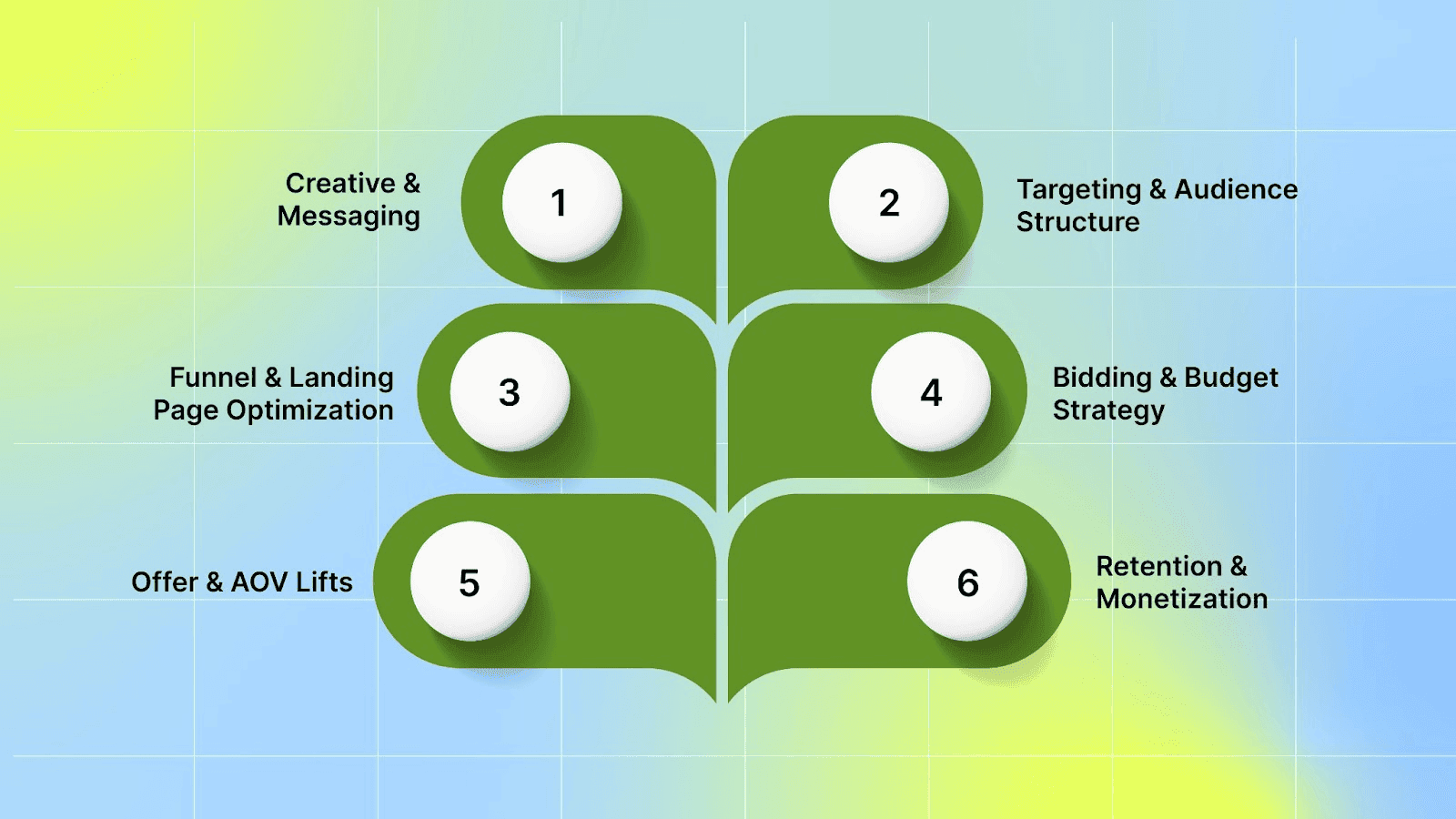

Tactics to Improve ROAS on Meta

Mobile app marketers and creative teams can boost ROAS by refining creatives, tightening audience targeting, fixing funnel leaks, and using value-aware bidding. The items below list practical moves and the signals to measure.

1. Creative & Messaging:

Creative usually moves the needle first. Conduct focused tests comparing short videos, UGC-style clips, and dynamic product formats to identify what effectively engages users. Video often outperforms static when it shows the product or gameplay quickly and includes readable captions for silent autoplay. Use dynamic product ads where catalog items are relevant, and UGC when social proof enhances conversion.

Tactics:

Build an experiment matrix that swaps one element at a time: hook (first 2–3 seconds), angle (benefit vs. problem), and CTA.

Use short formats (6–15s) for reels and longer demo clips for feeds.

Repurpose top-performing creatives into carousel and dynamic templates.

Prioritize creatives that show early engagement lifts, then validate those creative winners in wider cohorts before scaling.

2. Targeting & Audience Structure:

Use lookalikes seeded from high-value users or paying cohorts and apply layered exclusions to avoid low-value segments. This focuses spend on users who resemble customers who already pay.

Tactics:

Create lookalikes from a Day-30 or Day-90 payer cohort rather than raw installs.

Layer interests or behaviors only after testing broad automated targeting; then narrow them with exclusions (such as low-value countries, uninstallers, and one-time coupon hunters).

Maintain a separate retargeting audience for users who have already engaged or installed your app.

Use high-value seeds for lookalikes and exclude known low-value groups to ensure clearer ROAS signals.

3. Funnel & Landing Page Optimization:

Conversion rate improvements multiply ROAS because the same ad cost yields more revenue. Target landing pages and post-install flows that match the ad promise and remove friction points (load speed, confusing CTAs, long forms). Industry benchmarks help set realistic goals: median landing-page conversion rates sit in the mid-single-digit percentages, while top pages reach double digits.

Tactics:

Align creative messaging and landing page hero copy so users see the same offer and benefit.

Aim to raise the landing page conversion rate by small, testable increments (e.g., from 3% to 5%). Use A/B tests that change one element at a time.

For apps, optimize onboarding steps so first-session key events happen quickly (account creation, tutorial skip options).

Even a modest CVR gain reduces CAC and raises ROAS. Treat landing pages as a performance lever, not an afterthought.
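
A quick sketch of the 3% to 5% example. The cost per click and order value are hypothetical; only the conversion rate changes between the two runs.

```python
def cac(cpc: float, cvr: float) -> float:
    """Cost to acquire one customer: cost per click / conversion rate."""
    return cpc / cvr

def click_roas(aov: float, cpc: float, cvr: float) -> float:
    """Revenue per click (AOV x CVR) divided by cost per click."""
    return aov * cvr / cpc

for cvr in (0.03, 0.05):
    print(f"CVR {cvr:.0%}: CAC ${cac(1.50, cvr):.2f}, "
          f"ROAS {click_roas(50.0, 1.50, cvr):.2f}x")
# CVR 3%: CAC $50.00, ROAS 1.00x
# CVR 5%: CAC $30.00, ROAS 1.67x
```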

4. Bidding & Budget Strategy:

Use LTV or value-based bidding where possible. For apps, shift budget into value optimization and ensure Conversions API is sending server events to stabilize learning and improve event match quality. Game UA guides recommend LTV-aware bidding when scaling.

Tactics:

Enable value optimization (Meta’s value bidding) for campaigns where per-user revenue is modeled. Send accurate revenue values via CAPI/pixel.

For games, utilize LTV predictions and portfolio LTV strategies to allocate spending across titles or geographic regions. Monitor eCPM and CPI together.

Begin with conservative budgets during algorithm learning, then scale incrementally once the D7/D30 ROAS meets the break-even rules.

Value bidding, combined with strong server-side event signals, provides a more stable ROAS under privacy constraints.

5. Offer & AOV Lifts:

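Raising average order value lifts revenue per conversion, so the same acquisition cost produces a higher ROAS. Where per-order costs such as shipping are fixed, a larger basket also improves margin and lowers the break-even ROAS. Simple moves, such as bundles, cross-selling, and limited-time offers, increase AOV and make scaling easier.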

Tactics:

Test bundling common items at a small discount and present the bundle in the checkout flow.

Offer a subscription or replenishment option for consumables to convert one-time buyers into recurring customers.

For games, consider bundling IAPs or special starter packs to increase first-purchase size.

Increasing AOV directly improves economics and reduces the ROAS barrier needed to scale.
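
To see both effects of an AOV lift, the sketch below assumes a variable cost rate, a fixed per-order cost (for example shipping), and a constant cost to acquire a customer. All numbers are hypothetical, and the bundle is assumed not to change conversion rate.

```python
def order_margin(aov: float, variable_cost_rate: float,
                 fixed_cost_per_order: float) -> float:
    """Margin per order when part of the cost does not scale with basket size."""
    return (aov - aov * variable_cost_rate - fixed_cost_per_order) / aov

customer_cac = 25.0
for aov in (50.0, 65.0):
    m = order_margin(aov, variable_cost_rate=0.30, fixed_cost_per_order=5.0)
    print(f"AOV ${aov:.0f}: ROAS {aov / customer_cac:.2f}x, break-even {1 / m:.2f}x")
# AOV $50: ROAS 2.00x, break-even 1.67x
# AOV $65: ROAS 2.60x, break-even 1.60x
```

The larger basket raises achieved ROAS and, because the fixed cost is spread over more revenue, nudges the break-even point down.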

6. Retention & Monetization:

Retention is the long-term lever for ROAS. For apps, retention improvements increase Day-30/90 LTV, which in turn raises sustainable ROAS. For games, combine ad eCPM and IAP improvements when valuing cohorts. Industry trackers show ad eCPM and IAP mix matter by genre and geo.

Tactics:

Run ASO and onboarding tests to lift Day-1 and Day-7 retention.

Measure ad revenue eCPM separately and optimize ad placements to avoid hurting retention.

Use retention cohorts to predict Day-30/90 LTV before committing large budgets.

Stronger retention increases LTV and makes higher-cost acquisition channels profitable.

Also Read: Creating a Converting Facebook Ad: Practices and Examples

Reporting, Cohorts, and Experiments

Good reporting turns noisy signals into decisions. Build dashboards that compare cohorts by the same windows and run ordered experiments so outcomes are easy to interpret.

How to Build a ROAS Dashboard

The dashboard must display the same cohort windows used for bidding and finance so that teams can compare them apples to apples.

Required metrics:

ROAS by cohort (D1, D7, and D30 at minimum).

LTV:CAC ratio for selected windows (Day 30 or Day 90).

Retention curves (Day-1, Day-7, Day-30).

Event coverage and match quality (pixel vs. CAPI) to flag measurement gaps.

Use cohort charts that allow teams to track how a creative or audience performs from Day 1 through Day 30 before a full budget increase.
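
The required metrics can be derived from a handful of cohort totals. This is a minimal sketch with hypothetical names and figures: LTV is taken as margin-adjusted revenue per install, and event coverage is defined here as events the ad platform received divided by events in the internal system, which is one possible definition rather than Meta's own.

```python
def retention_curve(installs: int, active_by_day: dict[int, int]) -> dict[int, float]:
    """Share of the install cohort still active on each tracked day."""
    return {day: active / installs for day, active in active_by_day.items()}

def ltv_to_cac(revenue: float, margin: float, installs: int, spend: float) -> float:
    """Margin-adjusted LTV per install divided by cost per install."""
    ltv = revenue * margin / installs
    cost_per_install = spend / installs
    return ltv / cost_per_install

def event_coverage(platform_events: int, internal_events: int) -> float:
    """Fraction of internally recorded events that reached the ad platform."""
    return platform_events / internal_events

# Hypothetical D30 cohort.
installs, spend, d30_revenue = 1_000, 2_000.0, 3_000.0
row = {
    "roas_d30": d30_revenue / spend,
    "ltv_cac_d30": ltv_to_cac(d30_revenue, margin=0.70, installs=installs, spend=spend),
    "retention": retention_curve(installs, {1: 400, 7: 180, 30: 80}),
    "event_coverage": event_coverage(platform_events=850, internal_events=1_000),
}
print(row)
```

In this example ROAS reads 1.5× while the margin-adjusted LTV:CAC ratio is only about 1.05, so the cohort barely pays back once the 30% fee is counted.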

A/B Tests to Run First

Run tests in a priority order that reduces confounding factors.

Priority tests:

Creative (hook/angle): single change at a time.

Audience (broad vs. lookalike seeded from payers).

Bid type (cost cap vs. value bidding) with identical creatives.

Landing/onboarding flow variants.

Run each test long enough to reach statistical power for revenue outcomes, not just clicks.

When to Pause vs. Scale (Rule Set)

Use rules tied to break-even and marginal returns.

Rules:

Pause if D7 or D30 ROAS falls below break-even ROAS for the target cohort after accounting for fees and COGS.

Scale if D7 ROAS meets a safe threshold and D30 trends confirm economics, or if the predicted LTV:CAC ratio is favorable.

Apply marginal ROAS rules: increase spending only if the incremental ROAS of the added budget remains above the break-even point.

Make scaling rules explicit on the dashboard to ensure consistent decisions across teams.
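
One way to encode these rules is sketched below. The D7 threshold is expressed as a share of break-even that a healthy cohort is expected to reach by day 7; that share (0.5 here) is a hypothetical parameter you would calibrate from your own payback curves.

```python
from typing import Optional

def decide(d7_roas: float, d30_roas: Optional[float], break_even: float,
           d7_share: float = 0.5) -> str:
    """Return 'pause', 'hold', or 'scale' for one cohort."""
    if d7_roas < break_even * d7_share:
        return "pause"      # early signal is too weak to keep funding
    if d30_roas is None:
        return "hold"       # D7 looks fine, but D30 has not matured yet
    if d30_roas < break_even:
        return "pause"
    return "scale"

def marginal_roas(rev_before: float, spend_before: float,
                  rev_after: float, spend_after: float) -> float:
    """ROAS earned on the incremental budget only."""
    return (rev_after - rev_before) / (spend_after - spend_before)

break_even = 1.67
print(decide(0.9, 1.8, break_even))    # scale
print(decide(0.9, None, break_even))   # hold
print(decide(0.5, None, break_even))   # pause

# Budget raised from $1,500 to $2,000; revenue rose from $3,000 to $3,800.
extra = marginal_roas(3_000, 1_500, 3_800, 2_000)
print(f"marginal ROAS {extra:.2f}x, keep the increase: {extra >= break_even}")
```

In the last case blended ROAS is still 1.9×, but the added $500 returned only 1.6×, which is below break-even, so the marginal rule says to roll the increase back.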

Common Pitfalls & How to Avoid Them

These traps cause wasted ad spend. Each item below includes a short avoidance step.

Chasing single-day ROAS without LTV: Don’t let D1 wins alone drive scale; compare D1/D7 to D30/LTV before major budget moves. Avoidance: Require D7 and D30 checks for scale decisions.

Comparing cross-channel numbers without consistent windows: Different platforms report with different windows and attribution logic, so raw figures are not directly comparable. Avoidance: Normalize to the same cohort windows and currency before comparing.

Ignoring attribution delays and measurement changes: SKAdNetwork and aggregated reporting add delays and noise. Avoidance: Treat privacy-limited signals as cohort proxies, utilize CAPI and a pixel to enhance coverage, and map SKAN values into cohort LTV where feasible.

Plan reports and experiments so they account for measurement lag and reflect the true economics of the app or product.

Also Read: Understanding Meta's Advanced Mobile Measurement Terms: A Guide for Advertisers

Conclusion

Judge ads by the revenue window that matches your product, relying on cohort LTV instead of single-day wins. Keep your revenue inputs clean so ROAS math reflects true economics. Make creative and funnel tests the first levers you pull before cutting media spend.

Implement simple rules in your dashboard to enable teams to pause or scale campaigns using the same windows and break-even logic. Additionally, run ordered experiments that change only one variable at a time, making the results easy to interpret.

Segwise maps directly onto these needs. It's a creative analytics platform that automatically tags creative elements and links those tags to performance, allowing you to identify which creative hooks, scenes, or formats yield the strongest cohort returns without manual labeling. Segwise also provides tag-level reporting, experiment tracking, and automated alerts to surface break-even signals.

Start a 14-day free trial to see the workflow in your accounts.

FAQs

1. Should you include organic lift when you report ROAS?

Keep paid-only ROAS separate from organic uplift. Run incrementality or lift tests to quantify the incremental revenue ads produce, and report paid ROAS, along with a separate uplift number for clarity.

2. How do you measure ROAS when ads drive in-store or offline sales?

Send offline transactions into your ad platform’s offline-events dataset using the Conversions API (or upload matched offline events via your ad account). If that’s not possible, use unique promo codes or matched receipts to link in-store purchases to campaigns. Note: Meta’s dedicated Offline Conversions API was deprecated; the Conversions API / offline datasets are now the supported path.

3. Can you use ROAS to judge brand-awareness campaigns?

No, use brand-lift surveys and reach/recall metrics for brand work. Treat revenue-based ROAS as a longer-term outcome metric rather than the primary short-term success measure.

4. How often should your team update ROAS targets?

Monitor active campaigns weekly for signs of drift, and run a formal review monthly or after major market events. Use the weekly checks to spot anomalies and the monthly review to change targets or tests.

5. What data volume do you need before trusting ROAS test results?

Calculate the required sample size for each test using a power/sample-size tool and act only after you hit that minimum. As a rule of thumb, many revenue tests need on the order of hundreds of conversions per variant, but run the calculator against your baseline conversion rate and minimum detectable effect to be sure. Link your team to a sample-size calculator before starting.
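
If no calculator is at hand, the standard two-proportion approximation can be sketched in a few lines. This version assumes a two-sided test on conversion rate with equal-sized variants; revenue per user is usually noisier than conversion rate, so treat the result as a lower bound for revenue tests. The baseline and effect size are hypothetical.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline: float, mde_abs: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed per variant to detect an absolute lift of mde_abs in
    conversion rate (normal approximation, two-sided test)."""
    p1, p2 = baseline, baseline + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Detecting a lift from a 3% to a 4% conversion rate.
n = sample_size_per_variant(baseline=0.03, mde_abs=0.01)
print(f"{n} users per variant, about {n * 0.03:.0f} to {n * 0.04:.0f} conversions each")
```

For this example the answer is roughly 5,300 users per variant, which works out to somewhere around 160 to 210 conversions per arm; smaller lifts push the requirement up quickly.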
