The Creative Testing Roadmap: Strategies to Drive High-Impact Ad Campaigns

Are you shipping more ad creatives every week but still unsure which ones actually deserve more budget?

When creative decisions aren’t tied to clear learning, every new launch feels like another risky bet instead of a confident move. Without a structured approach, creative testing turns into guesswork, ROAS becomes unpredictable, and UA decisions feel inconsistent rather than repeatable.

This blog walks you through proven strategies and a practical ad creative testing roadmap built for user acquisition teams. You’ll learn how to apply strategic testing methods that help you scale winning creatives, reduce fatigue, and drive results across mobile games, DTC paid video, subscription apps, and growth agencies.

What is Creative Testing?

Creative testing is a structured, data-driven approach to evaluating ad creatives to understand which creative elements drive user acquisition performance. Instead of judging ads as “good” or “bad,” you test specific variables like messaging, visuals, formats, and CTAs to see what impacts performance.

For UA teams, creative testing helps you identify which creative decisions lead to better ROAS, lower CPA, and higher-quality users across networks. It turns creative optimization into a learning system that shows you what to test, iterate, or stop.

Once you understand what creative testing is, the next step is to know which elements you should test.

Which Creative Elements Should You Test?

Every element of an ad affects how it performs: changing one can improve results or break what was working. Instead of testing “more ads,” test specific creative elements that influence attention, intent, and conversion quality across networks, so you don’t rely on trial and error.

Here are the key creative elements you should test:

1. Visuals

Visuals decide whether someone stops scrolling or skips your ad entirely.

Images, illustrations, or product shots.

Static vs. motion assets.

Color schemes and design styles.

Video intros, opening hooks, and endings.

For mobile games and paid video, small visual changes can significantly impact engagement and install quality.

2. Copy

Copy shapes how clearly your value is understood in seconds.

Headlines, subheads, and body text.

Tone of voice (direct, playful, urgent, informative).

Benefit-led messaging vs. problem-led messaging.

Testing copy helps you learn which messages attract users who convert and stay.

3. Calls to action (CTAs)

CTAs influence whether interest turns into action.

Button text (“Buy now” vs. “Learn more”).

Placement, size, and color.

Static vs. animated or interactive CTAs.

In UA campaigns, even small CTA changes can shift conversion rates at scale.

4. Layouts and formats

Formats affect how your message is consumed across placements and devices.

Carousel vs. single image.

Short-form vs. long-form video.

Text overlays vs. clean, visual-first creatives.

Testing formats helps you adapt your creative strategy to platform behavior rather than forcing one asset everywhere.

So how do you find which creative elements are actually winning?

This is where platforms like Segwise help. Segwise connects creative elements (hooks, dialogs, visuals, formats, etc.) directly to business outcomes (ROAS, CPA/CPI, LTV, IPM, conversion rates), so teams stop guessing what works and start scaling creatives with data-backed confidence.

Moreover, with tag-level creative element mapping, you can discover patterns like "this hook appears in 80% of top-performing creatives" with complete MMP attribution integration.

Knowing what to test is important, but the real value comes from understanding why this process matters for performance at scale.

Why is Ad Creative Testing Important?

Without ad creative testing, UA teams end up scaling the wrong ads, reacting too late to fatigue, and repeating the same mistakes across campaigns. Instead of guessing what might work, creative testing gives you clear direction.

Here are the key reasons why creative testing is a must for UA teams:

Improve ROAS and overall efficiency: Testing different visuals, hooks, and messages shows you what actually resonates with users. This helps you allocate budget to creatives that drive stronger returns, rather than scaling what merely looks good.

Reduce creative fatigue before performance drops: Ongoing testing helps you spot when creatives start losing impact. Refreshing ads early prevents sudden dips in engagement, conversions, and ROAS.

Turn creative performance into data-backed insights: Creative testing helps you understand why certain ads work for specific audiences. These insights shape smarter creative briefs and stronger future campaigns.

Allocate budget more effectively: By identifying which creative elements drive results, you can confidently shift spend toward high-performing ads.

Stay competitive in crowded channels: Continuous testing keeps your campaigns fresh and adaptable. Instead of reacting late to market changes, you refine creatives proactively and maintain performance as competition increases.

In short, creative testing acts as a decision framework for user acquisition. It helps you build better ads, protect your budget, and drive more consistent performance as you scale.

Next, to see consistent results, you need a structured approach that turns insights into action.

Also Read: Ultimate Guide to Creative Testing for Mobile Game User Acquisition

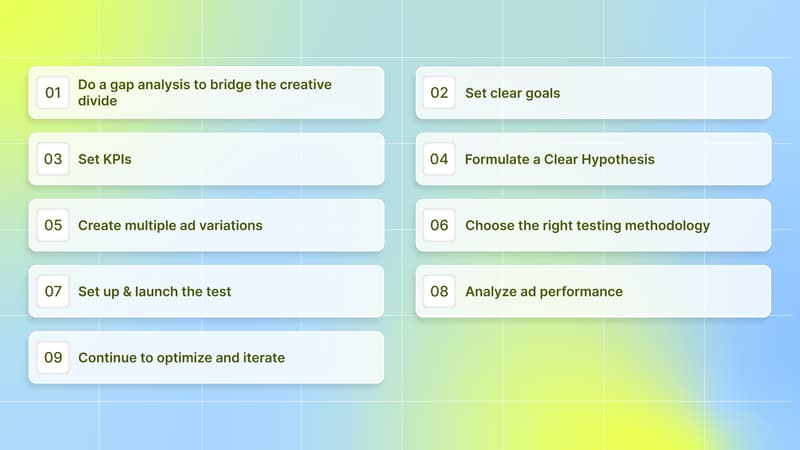

The Process of Creative Testing

The creative testing process is a structured way to test, learn, and improve your ad creatives over time. It helps you move from random experiments to intentional testing, so every test answers a clear UA question and supports better decision-making.

Here are the key steps in the creative testing process:

1. Do a gap analysis to bridge the creative divide

Creative testing starts with understanding where you are today. You review your current ads to spot gaps in formats, messaging, audiences, hooks, or platforms.

For UA teams, this means asking:

Are you overusing one format or angle?

Are certain audiences under-tested?

Are you spending the budget without clear creative learnings?

This step helps you identify what’s missing and where your next tests should focus, so your testing aligns with acquisition goals.

2. Set clear goals

Next, define your goal. Your goal should connect a creative change to a measurable user acquisition outcome.

For example, your goal might be to:

Improve early engagement for new video creatives.

Increase click-through rate by testing a new hook or CTA.

Lower CPA by changing messaging or visuals.

Attract higher-quality users who convert or retain better.

Clear goals keep your creative testing focused and ensure every test yields actionable insights rather than vague conclusions.

3. Set KPIs

Next, you need to align on which KPIs will determine success. Different creative elements influence different parts of the acquisition funnel, so not every test should be judged on the same metric.

Here are the most commonly used KPIs in ad creative testing for UA teams:

Impressions: Used to ensure fair delivery and baseline exposure across creative variants.

3-second video view rate: Helps evaluate the effectiveness of hooks and opening frames in paid video ads.

Average watch time: Indicates how well your creative holds attention beyond the first few seconds.

Click-through rate (CTR): Signals how compelling your message and CTA are at driving intent.

Cost per acquisition (CPA / CPI): Measures how efficiently a creative converts users into installs, signups, or purchases.

Cost per click (CPC): Useful for early-stage testing when conversion data is still limited.

Return on ad spend (ROAS): Used for scaling decisions to understand which creatives drive profitable growth.

The key is to select KPIs based on what you’re testing rather than judging every creative on the same metric.
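As a rough illustration of how the ratio KPIs above are derived from raw counts, here is a minimal Python sketch. The `CreativeStats` fields and the `kpis` helper are hypothetical names chosen for this example, and the 3-second view rate is assumed to be measured against impressions (ad networks may define it differently).

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CreativeStats:
    """Raw counts for one creative (hypothetical field names)."""
    impressions: int
    three_sec_views: int
    clicks: int
    conversions: int  # installs, signups, or purchases
    spend: float      # ad spend in account currency
    revenue: float    # attributed revenue in the same currency


def safe_div(numerator: float, denominator: float) -> Optional[float]:
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def kpis(s: CreativeStats) -> Dict[str, Optional[float]]:
    """Compute the ratio KPIs discussed above from raw counts."""
    return {
        "three_sec_view_rate": safe_div(s.three_sec_views, s.impressions),
        "ctr": safe_div(s.clicks, s.impressions),
        "cpc": safe_div(s.spend, s.clicks),
        "cpa": safe_div(s.spend, s.conversions),
        "roas": safe_div(s.revenue, s.spend),
    }


# Made-up numbers, for illustration only.
example = CreativeStats(impressions=10_000, three_sec_views=3_000, clicks=200,
                        conversions=50, spend=100.0, revenue=250.0)
for name, value in kpis(example).items():
    print(name, value)
```

Returning `None` instead of zero for an empty denominator keeps a creative with no delivery from looking like a creative with a 0% CTR.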

4. Formulate a Clear Hypothesis

A hypothesis is the foundation of effective creative testing. It’s a clear, testable statement about how a specific creative change is expected to impact user acquisition performance.

Instead of running random experiments, a strong hypothesis gives your test structure and direction. It connects the creative change to a measurable outcome, so you understand why something worked, not just what happened. This turns creative testing from trial-and-error into a strategic, data-driven process.

A good hypothesis clearly states:

What you’re changing

What result you expect

Which UA metric will be impacted

Examples of strong hypotheses:

“Customer testimonials will increase conversion rates.”

“Video ads will drive higher engagement than static images.”

“Emotional messaging will outperform feature-focused copy.”

“Showing pricing upfront will reduce drop-offs and improve conversions.”

When each test starts with a clear hypothesis, your results are easier to evaluate, act on, and scale across campaigns.

5. Create multiple ad variations

With clear goals and hypotheses in place, it’s time to turn your plan into creatives. Create multiple ad variations, each designed to test a specific creative element.

Focus on changing one element at a time, such as the hook, visual style, headline, or CTA, so you can clearly understand what drives performance. Avoid making too many changes in a single variation, as that makes results harder to interpret.

By testing controlled variations, you learn how different creative choices influence engagement, conversions, and user quality. Over time, this helps you build a repeatable creative strategy instead of relying on guesswork.
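To make the "one element at a time" rule concrete, here is a small Python sketch that builds variants from a control creative, where each variant differs from the control in exactly one element. The element names and values are invented for illustration, and `single_change_variants` is a hypothetical helper, not part of any ad platform.

```python
from typing import Dict, List, Tuple

# Hypothetical control creative, described by its elements.
control = {
    "hook": "gameplay_fail",
    "visual": "3d_render",
    "headline": "Can you beat level 5?",
    "cta": "Play now",
}

# Alternative values to try for some elements (illustrative only).
alternatives = {
    "hook": ["testimonial"],
    "cta": ["Install free", "Try it"],
}


def single_change_variants(
    control: Dict[str, str], alternatives: Dict[str, List[str]]
) -> List[Tuple[str, Dict[str, str]]]:
    """Return (changed_element, variant) pairs that each alter one element."""
    variants = []
    for element, options in alternatives.items():
        for option in options:
            if option == control[element]:
                continue  # identical to the control, nothing to test
            variant = dict(control)  # copy so the control stays untouched
            variant[element] = option
            variants.append((element, variant))
    return variants


for element, variant in single_change_variants(control, alternatives):
    print(f"changed {element}: {variant}")
```

Because every variant is one step away from the control, any performance difference can be attributed to the element that changed.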

6. Choose the right testing methodology

Choosing the right testing methodology is critical to obtaining reliable, actionable insights from your creative tests. The method you use should match what you’re trying to learn and the level of confidence you need before scaling.

Here are the most common creative testing methodologies used by UA teams:

A/B testing (split testing): A/B testing compares two versions of an ad with one controlled change, such as a new hook, CTA, or visual. This method is ideal when you want to isolate the impact of a single creative element and understand exactly what caused a performance change.

Sequential testing: Sequential testing involves testing creative elements in a planned order rather than all at once. For example, you might first test CTAs, then visual styles, and then messaging tone. This approach helps you isolate which elements have the greatest impact on performance and keeps learning structured across multiple test rounds.

Multivariate testing: Multivariate testing evaluates multiple creative elements at the same time, such as combining different visuals, copy, and CTAs. This approach works best when you have sufficient volume and want to understand how different elements interact.

Dynamic creative testing: Some platforms (e.g., Meta and Google) support dynamic creative testing, where you upload multiple creative assets (images, headlines, calls to action) and the algorithm mixes and matches them. This approach can be more efficient at scale, but results still depend on the quality of input assets. It also requires close monitoring to ensure learnings are clear and aligned with your testing goals.

Lift testing: Lift testing measures the true impact of your ads by comparing users who saw the campaign with a control group that did not. This method helps you understand the incremental value your creatives add beyond organic or baseline performance.

Incrementality testing: Incrementality testing focuses on whether your ads are driving net-new outcomes rather than conversions that would have happened anyway. It’s especially useful when making high-stakes scaling or budget decisions.

Each methodology serves a different purpose. Choosing the right one ensures your creative tests deliver insights you can trust and act on confidently.
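For A/B tests and lift tests, a common way to check whether a difference is more than noise is a two-proportion z-test. The article does not prescribe a specific statistical test, so treat the Python sketch below as one reasonable option under the usual large-sample assumptions. The function names are hypothetical, and only the standard library is used.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> Tuple[float, float]:
    """Compare conversion rates of variant A (control) and variant B.

    Returns (z, two_sided_p_value). Assumes large samples and a pooled
    rate strictly between 0 and 1 (otherwise the standard error is zero).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def relative_lift(rate_exposed: float, rate_control: float) -> float:
    """Relative lift of the exposed group over the control group."""
    return (rate_exposed - rate_control) / rate_control


# Made-up numbers: 100 vs. 150 conversions out of 1,000 users each.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
print(f"relative lift = {relative_lift(0.15, 0.10):.0%}")
```

A small p-value suggests the gap is unlikely to be chance alone. It does not say the gap is large enough to matter for your budget, which is why the relative lift is reported alongside it.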

7. Set up and launch the test

Once your testing plan is clear, it’s time to execute. Set up your test so each ad variation receives fair, consistent exposure.

This includes aligning audience targeting, placements, budgets, and test duration across all variants. The goal is to ensure performance differences come from creative changes, not setup inconsistencies.

After everything is configured, launch your ad variations and let them run long enough to gather meaningful data. A clean setup at this stage is critical for getting results you can trust and act on.

8. Analyze ad performance

After launch, review performance against the goals and KPIs you defined earlier. This is where creative testing turns into actionable learning.

Use reports or dashboards to compare how each variation performed and identify patterns across hooks, visuals, messaging, and formats. In particular, look for:

Which creatives performed best

Which elements drove results

Where performance dropped, or fatigue started

This analysis turns raw data into clear UA insights.

9. Continue to optimize and iterate

Creative testing doesn’t stop here. The real value comes from continuous optimization based on what you’ve learned.

Use insights from your analysis to refine winning creatives, improve underperforming ones, and design your next set of tests. This might mean refreshing hooks, adjusting messaging, or testing new formats before performance drops.

By repeating this process, you build a testing loop that keeps your campaigns fresh, reduces creative fatigue, and drives more consistent user acquisition results over time.

With the right testing foundation in place, it’s time to focus on strategies to turn learnings into scalable results.

Creative Testing Strategies to Scale High-Impact Ad Campaigns

High-impact ad campaigns result from disciplined creative testing, not volume or guesswork. To consistently improve user acquisition performance, you need strategies that help you learn faster, allocate budget wisely, and scale what works with confidence.

Here are the key strategies that make creative testing effective at scale:

1. Allocate a dedicated budget for creative testing

Creative testing requires a sufficient budget to produce reliable results. Tests that are underfunded often lead to incorrect conclusions and wasted effort.

A dedicated testing budget ensures that each creative variation receives fair exposure. Keeping it separate from your scaling budget lets you balance testing new ideas with scaling proven ones, so you improve efficiency while continuing to innovate.

2. Allow tests to run long enough to gather stable data

Creative performance often fluctuates early in a test. Ending tests too quickly can lead to decisions based on noise rather than true performance trends.

Letting tests run for an appropriate duration helps ensure results are stable and actionable. This patience leads to better decisions and prevents premature scaling or rejection of creatives.
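What counts as "long enough" depends on how small an effect you want to detect. As a rough guide, the Python sketch below uses the standard normal-approximation formula for comparing two proportions to estimate the sample size per variant, then converts it into days. The defaults (5% significance, 80% power) are conventional choices rather than recommendations from this article, and the function names are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    min_relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate units (impressions, clicks, or users) needed per variant.

    baseline_rate: current rate of the KPI, e.g. 0.02 for a 2% CTR.
    min_relative_lift: smallest relative change worth detecting, e.g. 0.2.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    if p1 == p2 or not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("rates must be distinct and strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variant: int, daily_units_per_variant: int) -> int:
    """Days required to reach the sample size at a given daily volume."""
    return ceil(n_per_variant / daily_units_per_variant)


n = sample_size_per_variant(baseline_rate=0.02, min_relative_lift=0.2)
print(n, "units per variant;", days_needed(n, 5_000), "days at 5,000 per day")
```

Smaller expected lifts require much larger samples, which is one reason underfunded tests tend to end on noise.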

3. Refresh creatives proactively to manage fatigue

Creative fatigue is inevitable as campaigns scale. When the same ads are shown repeatedly, user attention drops and acquisition efficiency declines.

Refreshing creatives proactively helps you maintain performance and avoid sudden drops in results. By updating creative elements before fatigue becomes visible, you can sustain momentum, control costs, and keep campaigns performing consistently at scale.

But how do you know when a creative is starting to fatigue?

This is where AI-powered platforms like Segwise come in. Segwise’s fatigue tracking detects a continuous decline in performance metrics and spend share using its internal logic. You can also set custom fatigue criteria and monitor creative performance across Facebook, Google, TikTok, and all major ad networks to catch fatigue before it impacts your budget allocation, campaign results, and ROAS.
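To show what a simple custom fatigue rule could look like, here is a minimal Python sketch. It is not Segwise's internal logic, which the article does not describe. It assumes a hypothetical rule: flag a creative when both its daily CTR and its daily spend share have fallen for at least three consecutive days.

```python
from typing import Sequence


def consecutive_declines(values: Sequence[float]) -> int:
    """Length of the run of day-over-day declines ending at the latest value."""
    run = 0
    for i in range(len(values) - 1, 0, -1):
        if values[i] < values[i - 1]:
            run += 1
        else:
            break
    return run


def is_fatiguing(
    daily_ctr: Sequence[float],
    daily_spend_share: Sequence[float],
    min_days: int = 3,  # assumed threshold, tune to your own data
) -> bool:
    """Flag fatigue when CTR and spend share both decline for min_days in a row."""
    return (
        consecutive_declines(daily_ctr) >= min_days
        and consecutive_declines(daily_spend_share) >= min_days
    )


# Made-up daily series, oldest first.
ctr = [0.030, 0.031, 0.029, 0.027, 0.024]
spend_share = [0.25, 0.26, 0.22, 0.20, 0.17]
print(is_fatiguing(ctr, spend_share))
```

A strict day-over-day rule like this is sensitive to noise at low volumes, so in practice you might smooth the series or require a minimum total drop as well.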

4. Segment audiences during creative testing

Audience behavior varies across segments. Testing creatives across different audience groups helps you understand how performance differs by user type, geography, or funnel stage.

This strategy ensures insights are accurate and prevents overgeneralizing results from one audience to another.
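The Python sketch below illustrates why pooled results can mislead. With the made-up numbers here, two creatives have identical overall CTRs, yet each one wins in a different geography. The row layout and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, str, int, int]  # (creative, segment, impressions, clicks)

# Made-up numbers, for illustration only.
rows: List[Row] = [
    ("hook_a", "US", 10_000, 300),
    ("hook_b", "US", 10_000, 200),
    ("hook_a", "BR", 10_000, 150),
    ("hook_b", "BR", 10_000, 250),
]


def ctr_by_segment(rows: List[Row]) -> Dict[Tuple[str, str], float]:
    """Aggregate CTR per (segment, creative) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [impressions, clicks]
    for creative, segment, impressions, clicks in rows:
        totals[(segment, creative)][0] += impressions
        totals[(segment, creative)][1] += clicks
    return {
        key: clicks / impressions
        for key, (impressions, clicks) in totals.items()
        if impressions
    }


def best_creative_per_segment(rows: List[Row]) -> Dict[str, str]:
    """Return the creative with the highest CTR in each segment."""
    best: Dict[str, Tuple[str, float]] = {}
    for (segment, creative), ctr in ctr_by_segment(rows).items():
        if segment not in best or ctr > best[segment][1]:
            best[segment] = (creative, ctr)
    return {segment: creative for segment, (creative, _) in best.items()}


print(best_creative_per_segment(rows))
```

Pooled across both segments, each creative has 450 clicks on 20,000 impressions, so the overall comparison alone would hide the per-segment winners.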

5. Document learnings to compound results over time

Creative testing delivers long-term value only when insights are captured and reused. Documenting test outcomes, patterns, and conclusions prevents repeated experiments and speeds up future decision-making.

Over time, this creates a knowledge base that improves creative efficiency and consistency across campaigns.
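One lightweight way to capture learnings is a structured record per test, stored as one JSON line each so the log is easy to search later. The Python sketch below is only a suggestion. The `CreativeTestRecord` fields are hypothetical and should be adapted to whatever your team actually tracks.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CreativeTestRecord:
    """One documented creative test (hypothetical schema)."""
    test_id: str
    hypothesis: str
    element_changed: str
    primary_kpi: str
    control_value: float
    variant_value: float
    conclusion: str


def to_json_line(record: CreativeTestRecord) -> str:
    """Serialize a record as a single JSON line."""
    return json.dumps(asdict(record), sort_keys=True)


def from_json_line(line: str) -> CreativeTestRecord:
    """Rebuild a record from a JSON line produced by to_json_line."""
    return CreativeTestRecord(**json.loads(line))


# Made-up example entry.
record = CreativeTestRecord(
    test_id="2024-hook-01",
    hypothesis="A testimonial hook will raise CTR versus the gameplay hook.",
    element_changed="hook",
    primary_kpi="ctr",
    control_value=0.020,
    variant_value=0.026,
    conclusion="Variant won; reuse testimonial hooks in the next brief.",
)
print(to_json_line(record))
```

Recording the hypothesis and the primary KPI next to the outcome makes it easier to see later why a test was run, not just how it ended.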

6. Use automated tools to scale creatives efficiently

As creative volume grows, manual testing quickly becomes inefficient and error-prone. Managing multiple variations by hand makes it harder to ensure fair budget distribution, consistent setup, and accurate performance comparison.

Using automated tools helps remove this friction. Platforms like Segwise automate creative tagging and connect creative performance directly to UA outcomes across networks. This reduces manual workload and allows UA and creative teams to focus on analyzing insights, iterating faster, and scaling winning creatives with confidence.

7. Use clear naming conventions for creatives and tests

Clear naming conventions are essential when running multiple creative tests across platforms, formats, and audiences. Without a structured naming system, it becomes difficult to track performance, compare results, and extract reliable learnings.

By naming creatives consistently, you make analysis faster and more accurate. Clear naming also keeps UA, creative, and performance teams aligned, ensuring insights don’t get lost as creative volume scales.
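As an example of what a structured naming system might look like, the Python sketch below builds and parses names of the form `network_format_hook_cta_vNN`. This convention is purely illustrative and is not one the article or any ad platform prescribes. The point is that a name you can parse is a name you can analyze.

```python
import re
from typing import Dict, Union

# Hypothetical convention: <network>_<format>_<hook>_<cta>_v<two-digit version>
NAME_PATTERN = re.compile(
    r"^(?P<network>[a-z]+)_(?P<format>[a-z]+)_(?P<hook>[a-z0-9]+)"
    r"_(?P<cta>[a-z0-9]+)_v(?P<version>\d{2})$"
)


def build_name(network: str, fmt: str, hook: str, cta: str, version: int) -> str:
    """Build a creative name and reject anything that breaks the convention."""
    name = f"{network}_{fmt}_{hook}_{cta}_v{version:02d}"
    if NAME_PATTERN.match(name) is None:
        raise ValueError(f"name does not follow the convention: {name!r}")
    return name


def parse_name(name: str) -> Dict[str, Union[str, int]]:
    """Split a creative name back into its fields."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"name does not follow the convention: {name!r}")
    fields: Dict[str, Union[str, int]] = dict(match.groupdict())
    fields["version"] = int(match.group("version"))
    return fields


name = build_name("meta", "video", "testimonial", "playnow", 3)
print(name)
print(parse_name(name))
```

Validating names when they are created keeps malformed names out of reports, where they are much harder to fix.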

When applied consistently, these strategies give you clarity on what works, reduce wasted spend, and help you scale user acquisition with greater confidence and control.

Even with the right strategies in place, creative testing can fall short if a few common pitfalls creep into your process.

Common Creative Testing Mistakes to Avoid

Creative testing only works when it’s done with intent and discipline. Many UA teams test frequently but still struggle to improve performance because small mistakes weaken results and lead to unclear learnings.

Here are the most common creative testing mistakes you should avoid:

Launching tests without a clear goal or hypothesis, leading to results that are hard to interpret or act on.

Ending tests too early before performance stabilizes, leading to decisions based on short-term fluctuations rather than real trends.

Underfunding creative testing, resulting in low exposure and unreliable conclusions.

Judging every test on the same KPI, even when different creative elements impact different stages of the acquisition funnel.

Scaling creatives based on early spikes without checking for sustainability or fatigue risk.

Failing to document test results, leading to repeated experiments and lost learning over time.

Treating creative testing as a one-time activity instead of a continuous optimization process.

Avoiding these mistakes helps you turn creative testing into a reliable system that improves user acquisition performance, protects your budget, and supports consistent scaling.

Also Read: How to Combat Creative Fatigue with AI Solutions

Conclusion

Creative testing works best when it’s treated as a system, not a one-time task. A structured approach helps you understand what drives performance, reduce guesswork, manage creative fatigue, and make confident scaling decisions. By focusing on the right elements, running disciplined tests, and continuously iterating on learnings, you can turn ad creative testing into a repeatable engine for user acquisition growth.

As creative volume and complexity grow, managing this process manually becomes harder. This is where AI-powered platforms like Segwise come in.

Segwise is an AI-powered creative analytics and generation platform that helps UA and performance marketing teams understand which creative elements drive performance, when creatives start fatiguing, and where budgets should shift before results drop.

Our powerful, multi-modal AI creative tagging automatically identifies and tags creative elements like hook dialogs, characters, colors, and audio components across images, videos, text, and playable ads to reveal their impact on performance metrics like IPM, CTR, and ROAS. With fatigue detection, you can catch performance decline before it impacts budget allocation and campaign results.

Moreover, with our tag-level performance optimization, you can instantly see which creative elements, themes, and formats drive results across all your campaigns and apps. With AI creative generation, you can auto-generate new ad creative variations using creative elements that drive performance: winning hooks, CTAs, visual styles, and characters identified through your creative insights.

So, why wait? Start your free trial and see how it helps you scale winning creatives and drive high-impact ad campaigns with confidence!
