How to Find Winning Creatives and Use AI to Maximize ROAS

You need winning creatives that keep ROAS (return on ad spend) high, but rising costs and short attention spans make that harder every day. For mobile app marketers, guessing which ad will perform well is no longer enough.

AI can break ads into components, such as hooks, CTAs, and visuals, and link those components to key metrics, including installs, retention, and revenue. This helps you avoid wasting budget on ads that only look effective on surface metrics and lets you focus on creatives that deliver sustained, long-term growth.

In this blog, we’ll explain how to establish a repeatable creative cycle, test ideas faster, capture clean data, and use AI to identify and scale high-performing creatives that maximize ROAS.

Winning Creatives with AI: Building a Repeatable Creative Cycle

Here’s a step-by-step approach for producing successful creative work consistently: Research → Ideation → Production → Testing → Scaling/Iterating → Restart.

Each phase builds on the last:

Research: Gather user signals, benchmark formats, and identify top-performing hooks in your category. This research tells you which formats and emotions to try first.

Ideation: Generate numerous low-effort concept variants (ideas for hooks, opening shots, CTAs, claims) to surface early performance and engagement signals.

Production: Create lightweight MVP assets (short-form clips, template-based edits, user testimonial snippets) that are cost-effective and quick to produce.

Testing: Run controlled A/B or pre-launch tests against a baseline, capture metric-level signals (CTR, view-through rate, installs, early retention), and select winners based on lift relative to the baseline.

Scaling / Iterating: Increase spend on validated winners, create new variants based on the winning creative’s structure, retire fatigued versions, and prepare winning versions for broader distribution.

Restart: Repeat the cycle continuously as ad creatives age, user preferences change, and new competitors appear.

Running this cycle intentionally makes creative discovery repeatable rather than accidental.

How Many Concepts Should You Begin With?

The goal is to surface signals quickly, rather than perfecting a single ad.

Prioritize volume over polish initially: Launch multiple minimum viable products (MVPs). Each MVP should test one big idea (hook, offer, or visual).

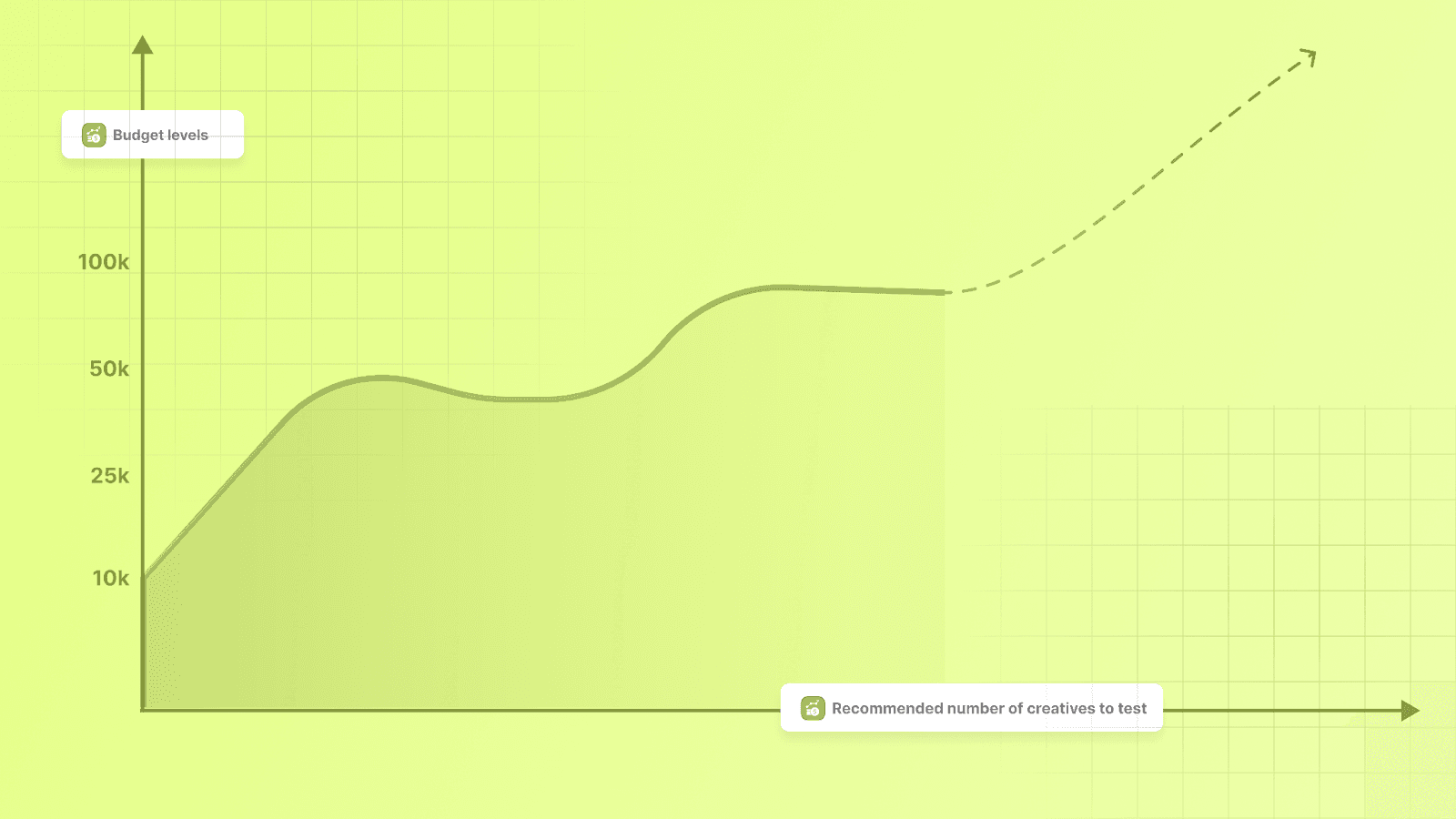

A quick rule of thumb: Aim to test dozens of new creative variations each month for mid-size budgets. A common rule many teams use is roughly 50 new creatives per $25,000 in monthly ad spend, adjusting the volume up or down based on the budget. This increases the chance of surfacing true signals rather than false positives.

How to allocate effort: 70% of early assets should be quick, inexpensive cuts (15 to 30 second videos, carousel stills, short captions). Reserve high-cost production for concepts that pass an initial signal test.

Signal thresholds: Don’t declare a winner on impressions alone. Measure increases in conversion rate and reductions in CPA relative to baseline, plus retention or 7-day ROAS, before scaling. Use multiple performance signals (short-term install metrics and early retention) to identify winners.

More MVPs increase the chance of finding a strong creative. Keep production light until the data shows it’s time to scale.
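As a rough illustration of the volume rule and the signal thresholds above, here is a minimal Python sketch. The class and function names (`Snapshot`, `monthly_creative_target`, `passes_signal_test`) are hypothetical, and the 10% lift thresholds are placeholder assumptions rather than recommendations; tune them to your own baseline.

```python
from dataclasses import dataclass

# Rule of thumb quoted in the text: ~50 new creatives per $25,000 monthly spend.
CREATIVES_PER_UNIT = 50
BUDGET_UNIT_USD = 25_000


def monthly_creative_target(monthly_spend_usd: float) -> int:
    """Scale the rule of thumb linearly with budget."""
    return round(monthly_spend_usd * CREATIVES_PER_UNIT / BUDGET_UNIT_USD)


@dataclass
class Snapshot:
    """Aggregated results for one creative (or the baseline) over the same window."""
    clicks: int
    installs: int
    spend_usd: float
    d7_revenue_usd: float

    @property
    def cvr(self) -> float:
        return self.installs / self.clicks if self.clicks else 0.0

    @property
    def cpa(self) -> float:
        return self.spend_usd / self.installs if self.installs else float("inf")

    @property
    def d7_roas(self) -> float:
        return self.d7_revenue_usd / self.spend_usd if self.spend_usd else 0.0


def passes_signal_test(variant: Snapshot, baseline: Snapshot,
                       min_cvr_lift: float = 0.10,
                       min_cpa_drop: float = 0.10) -> bool:
    """True only if CVR, CPA, and 7-day ROAS all beat the baseline.

    The 10% defaults are illustrative assumptions, not values from the text.
    """
    if baseline.cvr == 0 or baseline.cpa in (0, float("inf")):
        return False  # no usable baseline to compare against
    cvr_lift = variant.cvr / baseline.cvr - 1
    cpa_drop = 1 - variant.cpa / baseline.cpa
    return (cvr_lift >= min_cvr_lift
            and cpa_drop >= min_cpa_drop
            and variant.d7_roas >= baseline.d7_roas)
```

For example, `monthly_creative_target(10_000)` suggests about 20 new creatives for a $10,000 monthly budget. Requiring all three signals at once is one way to avoid crowning a winner on a single metric.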

Capturing Creative Learnings: Naming, Cataloging, and AI Readiness

The goal is to create data that is both searchable and consistent, enabling you to scale top-performing creatives, integrate with automation, and eventually feed AI models.

1. Naming Conventions (Practical Template + Rules)

Consistent naming allows you to filter tests, locate versions, and train models more efficiently. Use this simple, practical template:

PLATFORM_OBJECTIVE_AUDIENCE_FORMAT_CONCEPT_V#_DATE

Example: FB_IAP_LOOKALIKE_VIDEO_LEVELUP_V3_20250901

What each token means:

PLATFORM: FB / IG / TikTok / SNAP

OBJECTIVE: INST, CVR, IAP, ROAS

AUDIENCE: LLK20, RETARGET_7D, ANDROID_US

FORMAT: VIDEO_15s, CAROUSEL, STATIC_1x1

CONCEPT: 1–3 word idea tag (LEVELUP, SOCIALPROOF, DEMO)

V#: version number

DATE: YYYYMMDD (upload or test date)

Rules:

Use no spaces; separate words with underscores.

Keep concept tags consistent across tests.

Store the naming standard in a one-page SOP and share it with the ad ops, creative, and analytics teams.

Following this strict template accelerates scaling and ensures consistency across projects.
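To show how the template can be enforced automatically, here is a small Python sketch that builds and parses names. The helper names are hypothetical, and it assumes each token contains only letters and digits (as in the LEVELUP example), so a token written with an internal underscore, such as RETARGET_7D, would need a different internal separator before this parser could split it.

```python
import re
from datetime import datetime

# PLATFORM_OBJECTIVE_AUDIENCE_FORMAT_CONCEPT_V#_DATE
# Assumption: tokens are alphanumeric with no internal underscores.
NAME_PATTERN = re.compile(
    r"^(?P<platform>[A-Za-z0-9]+)"
    r"_(?P<objective>[A-Za-z0-9]+)"
    r"_(?P<audience>[A-Za-z0-9]+)"
    r"_(?P<format>[A-Za-z0-9]+)"
    r"_(?P<concept>[A-Za-z0-9]+)"
    r"_V(?P<version>\d+)"
    r"_(?P<date>\d{8})$"
)


def parse_creative_name(name: str) -> dict:
    """Split a creative name into its tokens, or raise ValueError."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"name does not follow the convention: {name!r}")
    fields = match.groupdict()
    # strptime raises ValueError for impossible dates such as 20251340.
    datetime.strptime(fields["date"], "%Y%m%d")
    fields["version"] = int(fields["version"])
    return fields


def build_creative_name(platform: str, objective: str, audience: str,
                        fmt: str, concept: str, version: int, date: str) -> str:
    """Assemble a name and validate it by parsing it back."""
    name = f"{platform}_{objective}_{audience}_{fmt}_{concept}_V{version}_{date}"
    parse_creative_name(name)
    return name
```

Parsing `FB_IAP_LOOKALIKE_VIDEO_LEVELUP_V3_20250901` yields platform `FB`, concept `LEVELUP`, and version `3`. Running a check like this at upload time keeps malformed names out of the library.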

2. Asset Cataloging and Taxonomy

Minimum metadata to capture for every creative:

Title (human-readable) and canonical name (per naming convention)

Upload date

Creative type and length (format), language, aspect ratio

Hook timestamps (for video, mark 0–3s hook), CTA presence, and type

Test IDs (campaign/ad set/test cell IDs), target audience, geo, platform

Performance KPIs: impressions, CTR, installs, CPA, 7-day retention, ROAS

Labels: WINNER / LOSER / INCONCLUSIVE with date-of-decision

Creative attributes/tags: emotional tone (joy, urgency), social proof, demo, UGC, brand logo presence

Where to store this:

Use a digital asset management (DAM) system, or a cloud-based asset library paired with a spreadsheet that holds the metadata.

Following standard taxonomy and metadata practices helps teams quickly locate assets and prepare datasets for AI.
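One way to keep this metadata consistent is to define a single record type and export it as JSON. The sketch below is a hypothetical schema in Python; the field names are assumptions that mirror the list above, not a standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional

ALLOWED_LABELS = {"WINNER", "LOSER", "INCONCLUSIVE"}


@dataclass
class CreativeRecord:
    """Minimum metadata for one creative (hypothetical field names)."""
    title: str                 # human-readable
    canonical_name: str        # per naming convention
    upload_date: str           # ISO date, e.g. "2025-09-01"
    creative_type: str         # video, carousel, static
    language: str
    aspect_ratio: str
    platform: str
    geo: str
    audience: str
    length_seconds: Optional[float] = None
    hook_window_seconds: Optional[list[float]] = None  # e.g. [0, 3]
    cta_type: Optional[str] = None                     # None means no CTA
    test_ids: list[str] = field(default_factory=list)
    kpis: dict[str, float] = field(default_factory=dict)
    tags: list[str] = field(default_factory=list)
    label: str = "INCONCLUSIVE"
    decision_date: Optional[str] = None

    def __post_init__(self) -> None:
        # Reject labels outside the three allowed values.
        if self.label not in ALLOWED_LABELS:
            raise ValueError(f"unknown label: {self.label!r}")


def to_json(record: CreativeRecord) -> str:
    """Serialize a record so it can be stored next to the asset."""
    return json.dumps(asdict(record), sort_keys=True)
```

A fixed schema like this makes missing fields obvious and gives later analysis a predictable input.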

3. Versioning and Provenance

Keep original files and any derivative exports (e.g., original.mov + fb_15s_v1.mp4).

Track who exported each version and when it was exported.

Include a brief annotation describing the test result and hypothesis.

These small rules save time and prevent confusion as your creative library grows.

4. Prepare for AI

Capture the following now to make your library AI-ready:

Rich, structured metadata (see list above) so AI models can clearly distinguish winners from losers.

Transcripts and screenshots of video assets, because text and still frames are easier for models to process than raw video.

Raw performance timelines (daily KPIs) to help models learn patterns like fatigue curves and scaling behaviors.

Creative-element segmentation (hook frame, opening caption, voiceover text, music presence/absence). This enables generative or scoring models to identify which elements drive lift.

Providing structured, high-volume creative datasets maximizes the utility of AI.

5. Capturing Learnings in a Lightweight Playbook

Maintain a living document tracking top-performing formats and the core reasons they were effective (hook, visual, offer). Link playbook entries to the exact asset names and tests for quick replication.

Make your creative data tidy, labeled, and versioned now; it grows in value as you scale and hand it off to automation or AI.

Suggested Watch: Build custom metrics in Segwise. See how Segwise turns naming conventions and tags into trackable, actionable metrics in minutes via its no-code dashboards and tag extraction.

Identifying True Winning Creatives via Performance Signals

Begin by establishing a process that distinguishes short-term traction from durable performance.

1. Delivery vs Performance: Why High Delivery Doesn’t Always Equal a Winner

A creative that gets most of the impressions or budget isn’t automatically the winner. Platforms often funnel delivery toward a small set of ads. Check whether that delivery leads to better downstream business outcomes, such as retention, lifetime value (LTV), and ROAS. If those downstream metrics don’t improve, the ad is merely gaining exposure, not attracting profitable users.

Quick check: When an ad dominates delivery, compare its cohort metrics (installs, Day 1 and Day 7 retention, first-purchase rate, and LTV) with those of other creatives running at a similar scale.

2. Essential KPIs to Evaluate: What You Must Track

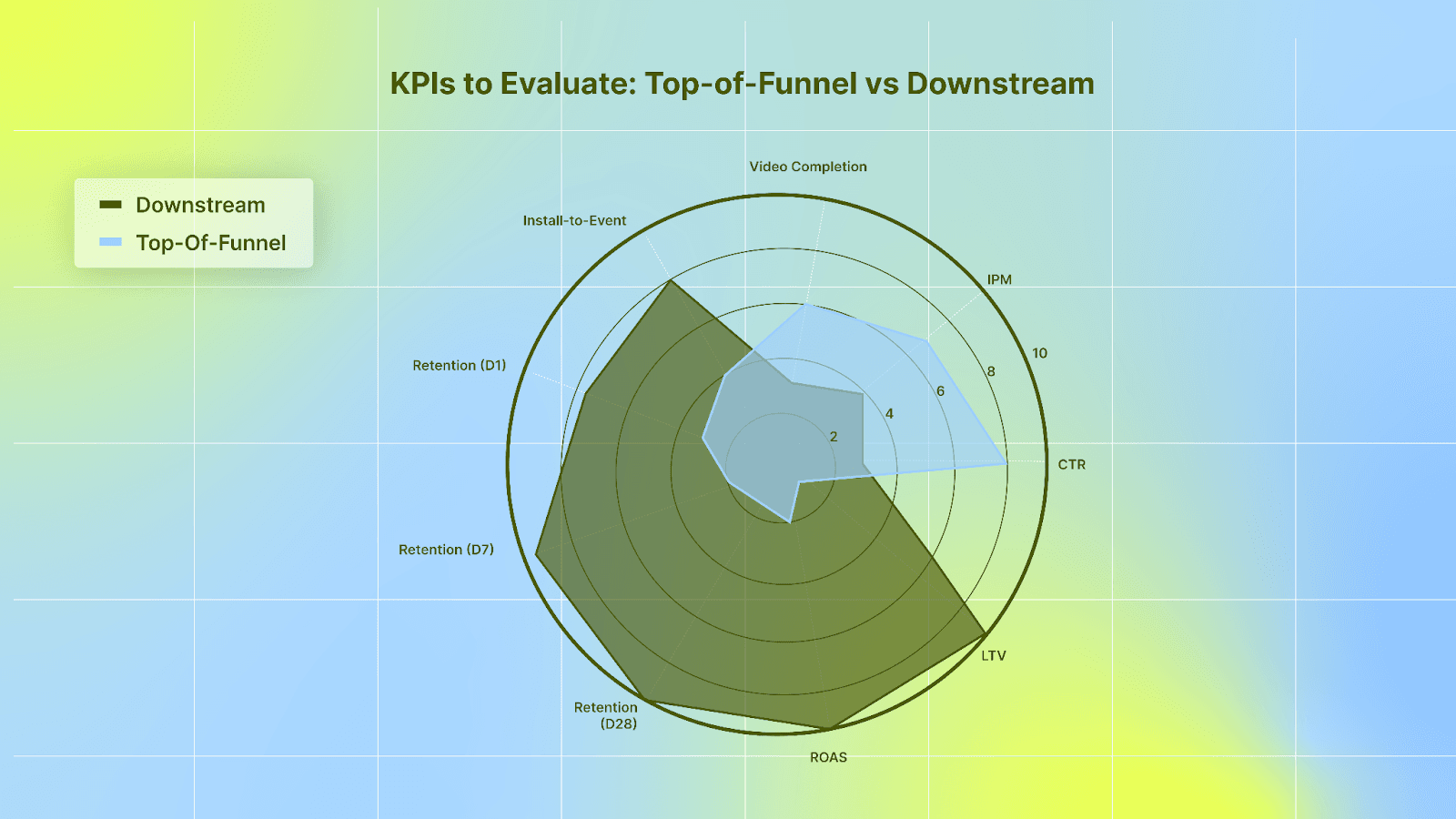

Track both top-of-funnel and downstream numbers. Focus on:

ROAS: measured over the right window for your app’s monetization curve.

Retention (D1, D7, D28): Indicates whether users remain engaged.

LTV: long-term revenue per user; the true profit signal.

CTR: attention signal; good for creative testing but not sufficient alone.

IPM (installs per mille): Installs per 1,000 impressions; useful for comparing how efficiently creatives turn impressions into installs.

Install-to-event rates: conversion from install to key in-app action.

Video completion, hook and hold metrics: Measures how quickly the creative grabs attention and how long it holds it.

Use a mix of these KPIs to judge an asset, not a single metric. Advanced creative analysis pairs element-level data (hooks, scenes, narration) with performance to learn what specifically drives results.
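For reference, the ratio KPIs above reduce to simple divisions. The Python sketch below computes them from one row of aggregated data; the dictionary keys (`impressions`, `purchasers`, `active_d7`, and so on) are hypothetical and should be mapped to whatever your MMP export provides.

```python
def _ratio(numerator: float, denominator: float) -> float:
    """Divide safely; return 0.0 when there is no denominator."""
    return numerator / denominator if denominator else 0.0


def ctr(clicks: int, impressions: int) -> float:
    return _ratio(clicks, impressions)


def ipm(installs: int, impressions: int) -> float:
    """Installs per 1,000 impressions."""
    return _ratio(installs * 1000, impressions)


def roas(revenue: float, spend: float) -> float:
    return _ratio(revenue, spend)


def install_to_event_rate(event_users: int, installs: int) -> float:
    return _ratio(event_users, installs)


def retention(active_on_day_n: int, cohort_installs: int) -> float:
    return _ratio(active_on_day_n, cohort_installs)


def kpi_summary(row: dict) -> dict:
    """Compute the KPI mix for one creative from raw counts."""
    return {
        "ctr": ctr(row["clicks"], row["impressions"]),
        "ipm": ipm(row["installs"], row["impressions"]),
        "roas": roas(row["revenue"], row["spend"]),
        "install_to_purchase": install_to_event_rate(row["purchasers"], row["installs"]),
        "d7_retention": retention(row["active_d7"], row["installs"]),
    }
```

Note that ROAS here uses whatever revenue window the input row covers, so pick the window that matches your app's monetization curve before comparing creatives.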

3. Scalability Test: Verify a Winner at Increased Spend, Across Networks, and in New Geographies

Follow a controlled scaling path:

Maintain a baseline control group: Keep a control audience or campaign as a benchmark for comparison.

Ramp spend in stages: Move from low to moderate to high daily budget steps. Watch ROAS and CPI at each step.

Cross-network test: Move the creative to a different network or placement while targeting similar audience segments. See if performance holds.

Geo expansion test: Launch in a nearby or similar market first. If metrics hold, expand further.

Compare downstream cohorts: Confirm retention and LTV stay stable after scaling.

Stop on threshold breach: If ROAS or retention falls below your guardrails, pause and investigate the issue.

These steps reveal whether the creative’s initial success was platform-specific or truly generalizable.

If the creative keeps ROAS, retention, and LTV healthy through these checks, you have a true winner worth scaling.
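The staged ramp with guardrails can be sketched in a few lines of Python. Everything here is illustrative: the function names are hypothetical, the 1.5x step multiplier and guardrail values are assumptions, and `observe` stands in for however you read ROAS and D7 retention at each budget level.

```python
from typing import Callable


def ramp_schedule(start_budget: float, multiplier: float, steps: int) -> list[float]:
    """Daily budgets for each stage, e.g. 100 -> 150 -> 225."""
    return [round(start_budget * multiplier ** i, 2) for i in range(steps)]


def run_ramp(budgets: list[float],
             observe: Callable[[float], tuple[float, float]],
             min_roas: float,
             min_d7_retention: float) -> list[tuple[float, str]]:
    """Walk the schedule and stop at the first guardrail breach.

    observe(budget) must return (roas, d7_retention) measured at that budget.
    """
    log = []
    for budget in budgets:
        roas, d7_retention = observe(budget)
        if roas < min_roas or d7_retention < min_d7_retention:
            log.append((budget, "pause"))  # stop on threshold breach
            break
        log.append((budget, "continue"))
    return log
```

In practice each stage would run long enough for cohort metrics to mature before `observe` is called; the loop only encodes the decision rule, not the waiting.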

Scale Winning Creatives Without Sacrificing ROAS

Once you’ve confirmed a creative’s strength, focus on scaling it without losing efficiency.

Expand Reach

Move beyond the initial setup by:

Testing new networks, ad placements, and broader audiences.

Entering new geographies with localized adjustments.

Refinement Loop

Treat your winner as a base version and polish it for longevity:

Localize text, voiceovers, and visuals.

Adapt aspect ratios and lengths for different placements.

Upgrade production values for brand consistency.

Create variants by recombining proven hooks and elements to delay fatigue.

Tactical Scaling Checklist

To manage growth safely, keep a disciplined plan:

Define a clear budget ramp schedule with checkpoints.

Maintain control groups to compare against the baseline.

Set monitoring thresholds for ROAS, CPI, and retention, along with corresponding alerts.

Track element-level performance to refresh assets proactively before performance declines.

Utilize AI tools to tag which creative elements (hooks, scenes, captions) should be reused or replaced.

Scaling isn’t just about spending more; it’s about protecting performance, refreshing at the right time, and maintaining a strong ROAS as reach expands.

Also Read: Building A Winning Creative Strategy: A Step-By-Step Approach

Pinpoint High-Impact Elements in Winning Creative

After confirming a creative is a winner, break it down into parts to keep what's working and reuse it.

What to Examine (And What Each Tells You)

Hook/opening frames: The first 1–3 seconds. Measure how quickly attention is captured and whether viewers stay engaged. A weak hook loses viewers before the message starts.

Product shots: Clearly and prominently display the product or key feature. High-quality product shots enhance install-to-event rates when they align with the app’s core value.

Voiceover & audio cues: Tone, pacing, and messaging clarity affect comprehension and conversion. Audio changes can affect completion and retention.

Text overlays/captions: Readability, timing, and message hierarchy impact users who watch muted content. Test short, clear overlays that match the voiceover.

End cards & CTAs: The final frame prompts the viewer to take action. Variations in CTA copy, size, or timing influence conversion and ROAS.

Formatting & aspect: Aspect ratio and pacing for each placement (feed, rewarded videos, interstitial) influence completion and hook metrics.

Map Metrics to Elements (How to Know Which Part Drove the Result)

Hook rate: The percentage of viewers who keep watching past the first 2–3 seconds of the creative, which reflects how effective the opening frames and hook are.

Frame-level engagement: Which frames retain users? Use heatmaps or time-based retention to spot drop points.

Completion & watch-time: Indicate storytelling and product clarity.

Install-to-event and retention deltas: Compare cohorts exposed to different element variants to see which elements deliver higher LTV or retention rates.
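As a minimal sketch of the video metrics above, the Python below derives hook rate, completion rate, and the biggest drop point from a per-second viewer curve. The input format (a list where index `i` is the number of viewers still watching at second `i`) is an assumption; platforms report this data in different shapes.

```python
from typing import Optional


def hook_rate(viewers_by_second: list[int], hook_seconds: int = 3) -> float:
    """Share of initial viewers still watching after the hook window."""
    if not viewers_by_second or viewers_by_second[0] == 0:
        return 0.0
    index = min(hook_seconds, len(viewers_by_second) - 1)
    return viewers_by_second[index] / viewers_by_second[0]


def completion_rate(viewers_by_second: list[int]) -> float:
    """Share of initial viewers who reach the final second."""
    if not viewers_by_second or viewers_by_second[0] == 0:
        return 0.0
    return viewers_by_second[-1] / viewers_by_second[0]


def largest_drop_second(viewers_by_second: list[int]) -> Optional[int]:
    """Second at which the largest absolute viewer loss occurs."""
    drops = [
        (viewers_by_second[i - 1] - viewers_by_second[i], i)
        for i in range(1, len(viewers_by_second))
    ]
    if not drops:
        return None
    return max(drops, key=lambda drop: drop[0])[1]
```

A drop concentrated in the first second points at the hook, while a drop later in the curve points at the body or the transition into the end card.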

Asset Taxonomy & Naming Conventions: Why It Matters When AI Is in the Stack

Use consistent, element-level names so teams and AI tools can find and compare parts quickly. Example pattern:

APP_CAMPAIGN_CREATIVE-HOOK[A/B]_SHOT[01]_VO[EN]_ASPECT9_16_v1

Tag each asset with fields: hook ID, shot type, CTA variant, locale, aspect ratio, and production level.

Benefits: faster search, cleaner datasets for AI models, reliable training labels, and simpler recombination. Well-named assets let automated tools score and prioritize variants without manual cleanup.

With element-level metrics and a strict naming system, you can pinpoint what truly moves ROAS and feed those signals straight into your testing and AI pipelines.

Recombine Elements for More Wins

Instead of replacing a full creative, run controlled swaps of individual elements to find higher-performing mixes while protecting the original synergy.

Design Controlled Variants

Change one element at a time (hook, background, CTA, color palette) to isolate impact. Keep everything else identical.

Use a test matrix for efficient coverage: Rows represent hooks, columns represent end cards, and the metric is ROAS delta (or retention delta). This displays interaction effects without increasing the test count.

Start narrow: Test top 2–3 hooks against top 2 end-cards (4 to 6 variants). Expand only when a pattern emerges.

Recombine vs. Replace: Avoid Killing the Synergy

Preserve the established anchor: If a hook is the core driver, retain it when testing new bodies or end cards. This maintains the creative’s effectiveness.

When recombining, treat the creative as a system: Some elements amplify one another. If a recombination drops performance, revert and test a different combination rather than abandoning the original.

Use staged rollouts: Test recombinations on a small spend or holdout segment first, then ramp if ROAS and retention hold.

Example test matrix:

Rows = Hook A, Hook B, Hook C

Columns = Endcard 1, Endcard 2

Cells = Variant (Hook X + Endcard Y)

Metric = change in ROAS vs. baseline; secondary metrics = install-to-event, D7 retention
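The matrix above can be generated and filled in programmatically. This Python sketch uses hypothetical hook and end-card labels, and the ROAS numbers in the usage note are made up purely for illustration.

```python
from itertools import product

HOOKS = ["HookA", "HookB", "HookC"]
ENDCARDS = ["Endcard1", "Endcard2"]


def build_variants(hooks: list[str], endcards: list[str]) -> list[tuple[str, str]]:
    """Every hook paired with every end card (rows x columns)."""
    return list(product(hooks, endcards))


def roas_delta_matrix(observed_roas: dict[tuple[str, str], float],
                      baseline_roas: float) -> dict[str, dict[str, float]]:
    """Nested dict: matrix[hook][endcard] = ROAS change vs. baseline."""
    matrix: dict[str, dict[str, float]] = {}
    for (hook, endcard), roas in observed_roas.items():
        matrix.setdefault(hook, {})[endcard] = round(roas - baseline_roas, 4)
    return matrix


def best_cell(matrix: dict[str, dict[str, float]]) -> tuple[str, str]:
    """Hook and end card with the largest ROAS delta."""
    cells = [(delta, hook, endcard)
             for hook, row in matrix.items()
             for endcard, delta in row.items()]
    _, hook, endcard = max(cells)
    return hook, endcard
```

With three hooks and two end cards, `build_variants(HOOKS, ENDCARDS)` returns six cells. Reading a row shows how one hook behaves across end cards, and reading a column shows the reverse, which is where interaction effects become visible.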

Operational Tips for Reliable Results

Keep a control (the original winner) running in all tests so you can measure actual lift accurately.

Run each variant long enough to gather stable cohort-level downstream metrics (not just clicks).

Use element tags and a consistent naming convention. This allows AI scoring and dashboards to automatically aggregate results by hook, shot, VO, or CTA.

Recombine selectively, measure accurately, and let element-level wins accumulate. That’s how small changes turn into sustained ROAS gains.
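To judge whether a variant has run "long enough", one common option is a pooled two-proportion z-test on a rate metric such as D7 retention. The article does not prescribe a specific statistical test, so treat this Python sketch as one possible check; the function name is hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value).

    A is the control, B is the variant. conv_* are users who converted
    (e.g. retained on day 7) out of n_* installs in each cohort.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both cohorts at 0% or 100%: no detectable difference
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - cdf)
```

A small p-value suggests the gap between control and variant is unlikely to be noise. It says nothing about whether the gap is large enough to matter commercially, so read it alongside the ROAS delta.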

Use AI to Boost ROAS

Mobile app marketers and marketing teams should use AI to convert element-level creative signals into informed decisions that protect and grow ROAS.

What AI Actually Does for Creatives

AI breaks creative assets into measurable pieces. Computer vision splits videos into frames and scenes. Natural language processing (NLP) reads on-screen text and transcribes voiceovers, making meaning and sentiment available as data.

Models then connect the presence or timing of specific elements like a hook, product shot, or CTA to performance metrics such as install-to-event rates, retention, or ROAS.

Layered Analysis: How the Pipeline Works in Practice

Scene classification & frame segmentation: The asset is cut into the logical units (hook, demo, close).

Text & audio extraction: overlays, captions, and voice tracks are transcribed and labeled.

Element tagging: objects, faces, logos, and other visual elements are detected and tagged.

Join with performance data: Element-level tags are matched to MMP or analytics cohorts, allowing models to identify which frames or lines correlate with higher LTV or ROAS.

This layered view enables testing not only entire creatives but also the exact moments that drive value.

Practical AI Advantages for Performance Teams

Label at scale: AI removes manual tagging bottlenecks, producing consistent element labels across thousands of assets.

Generate many variations cost-effectively: Generative engines can create localized and small variants, allowing teams to focus their human production time on higher-value work.

Predict promising variants: Models score combinations by expected uplift, so tests focus on high-probability wins rather than random guesses.

These capabilities enable teams to run fewer low-value tests and focus their budget on variants that matter.

Recommended Workflow & Data Flows

Instrument & tag assets at creation time: Keep tags minimal (hook ID, language, placement type) so AI models can process them efficiently.

Export performance data from your MMP or analytics platform (installs, revenue, retention cohorts) into the AI tool.

Run element-level attribution: Use the joined dataset to measure which scenes, hooks, or overlays correspond to real downstream lift.

Auto-generate variants and rank them by predicted uplift: Let the model propose the top 3–5 variants based on expected ROAS improvement.

Run targeted A/B tests on top predictions: Test on holdout segments and measure cohort-level retention and LTV, not just clicks, before broad rollout.
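The join between element tags and performance data in the workflow above can be illustrated with plain Python. This is a simplified sketch with hypothetical tag names and input shapes; it aggregates spend-weighted ROAS per tag, which shows correlation only and is not a substitute for the controlled tests in the last step.

```python
from collections import defaultdict


def roas_by_tag(asset_tags: dict[str, set[str]],
                performance: dict[str, dict[str, float]]) -> dict[str, float]:
    """Spend-weighted ROAS for every element tag.

    asset_tags:  creative name -> set of element tags (e.g. "hook:question")
    performance: creative name -> {"spend": ..., "revenue": ...}
    """
    spend: dict[str, float] = defaultdict(float)
    revenue: dict[str, float] = defaultdict(float)
    for asset, tags in asset_tags.items():
        perf = performance.get(asset)
        if perf is None:
            continue  # tagged asset with no performance data yet
        for tag in tags:
            spend[tag] += perf["spend"]
            revenue[tag] += perf["revenue"]
    return {tag: revenue[tag] / spend[tag] for tag in spend if spend[tag] > 0}


def rank_tags(tag_roas: dict[str, float]) -> list[tuple[str, float]]:
    """Tags sorted from highest to lowest ROAS."""
    return sorted(tag_roas.items(), key=lambda item: item[1], reverse=True)
```

Because a creative carries several tags at once, a strong tag may simply be riding along with another strong element. That is why the top-ranked tags feed into A/B tests rather than straight into scaling decisions.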

Tooling Notes (What to Use)

Creative analysis platforms (computer vision + NLP): Platforms like Segwise, VidMob, and CreativeX use computer vision and language models to tag creative elements (often frame-by-frame), detect on-screen text, and identify audio or voiceover signals, enabling teams to connect element-level tags to campaign performance.

MMPs / analytics (trusted downstream metrics): AppsFlyer, Adjust, Branch, and Singular. Export installs, events, retention, and revenue cohorts from these platforms into your analysis pipeline.

Creative generation engines (fast, localized variants): Runway, Synthesia, Adobe Firefly, Canva, and Pictory generate or edit video and image variants that are specific to format and language at scale for high-confidence testing.

When AI is connected to clean performance data, marketing teams can stop guessing which creative elements are successful. They can understand which element drove the win and scale the parts that boost ROAS.

Also Read: Top Creative Analytics Tools for Successful Ad Campaigns 2025

Detect Creative Fatigue Early & Refresh with Precision

Mobile app marketers and marketing teams need clear signals and a practical refresh plan to keep creatives driving ROAS.

Fatigue Signals to Watch

Falling CTR or IPM: Steady drops compared to the recent baseline are the first signs.

Lower delivery share with rising CPM: Platforms may still show the ad, but at higher prices and to less valuable users.

Declining video completion or watch time: Viewers leave earlier, suggesting weaker creative hooks or repetition.

Rising frequency and onboarding drop-offs: The same people see the ad too often, resulting in lower conversion rates at install or first use.

A practical detection method is comparing short windows against a rolling baseline (for example, 7-day vs 28-day). Many teams consider a sustained decline of ~10–20% in CTR or IPM week-over-week as a trigger to take action.
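The short-window versus rolling-baseline comparison can be expressed as a small Python function. This is a sketch under stated assumptions: the function name is hypothetical, the 15% threshold is just the midpoint of the range mentioned above, and the 28-day baseline here includes the most recent 7 days.

```python
from statistics import mean


def fatigue_check(daily_values: list[float],
                  short_window: int = 7,
                  long_window: int = 28,
                  decline_threshold: float = 0.15) -> tuple[float, bool]:
    """Compare the recent average of a metric (CTR or IPM) with its baseline.

    Returns (relative decline, whether the decline meets the threshold).
    """
    if len(daily_values) < long_window:
        raise ValueError("need at least long_window days of data")
    recent = mean(daily_values[-short_window:])
    baseline = mean(daily_values[-long_window:])
    if baseline == 0:
        return 0.0, False
    decline = 1 - recent / baseline
    return decline, decline >= decline_threshold
```

A single flagged day is not yet a sustained decline. One option is to act only when the check fires on several consecutive days, which filters out weekday effects and delivery noise.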

Frankenstein Refresh vs Full Replacement (How to Decide)

Try a Frankenstein refresh when the decline is moderate and element-level signals still appear healthy (early hook performance is strong, and completion is only slightly down). Swap or recombine high-value elements such as the hook, end card, or CTA and test those variants. This preserves any existing synergy.

Choose full replacement when core engagement (hook rate, completion) declines or downstream metrics (D7 retention, LTV, ROAS) drop significantly. A completely new concept is the cleaner reset when the entire creative system no longer delivers.

Operational rule: Always A/B test refreshes against the original winner on a holdout segment before rolling out to the broader audience. Use cohort-level downstream metrics (retention, revenue) as the primary success signals, not only clicks.
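The refresh-or-replace decision can be written down as an explicit rule so it is applied the same way every time. The Python sketch below is hypothetical: the inputs are relative declines versus the creative's own baseline (0.12 means 12% down), and all three cutoffs are illustrative assumptions that you would calibrate on your own data.

```python
def refresh_decision(ctr_decline: float,
                     hook_rate_decline: float,
                     completion_decline: float,
                     downstream_decline: float,
                     trigger: float = 0.10,
                     core_limit: float = 0.15,
                     downstream_limit: float = 0.20) -> str:
    """Map fatigue signals to an action.

    downstream_decline is the worst decline among D7 retention, LTV, and ROAS.
    """
    core_broken = (hook_rate_decline >= core_limit
                   or completion_decline >= core_limit)
    if core_broken or downstream_decline >= downstream_limit:
        return "full_replacement"      # the creative system no longer delivers
    if ctr_decline >= trigger:
        return "frankenstein_refresh"  # moderate decline, elements still healthy
    return "keep_running"
```

Whichever branch fires, the output is a candidate action to test against the original winner on a holdout segment, not a signal to roll out immediately.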

Suggested Watch: Catch creative fatigue early. Learn how to spot fatigue signals before spending is wasted and decide whether to refresh or replace.

Parallel Test Strategy

Maintain a continuous testing pipeline that allows one team member to create and assess variants regularly. Quickly document insights and eliminate ineffective options.

Reserve a steady testing budget (many teams use roughly 5–15% of media spend or follow a 70/20/10 split where ~10% funds new experiments). This prevents reliance on a single creative and shortens the time to find the next winner.

Start with focused element swaps (e.g., hooks, CTAs), measure cohort outcomes, and then expand the most promising variants. Keep a control always running.

Monitor the signal set, run targeted Frankenstein swaps first, and then move to full replacements when core metrics collapse. Keep a small, steady experiment engine to avoid performance gaps and protect ROAS.

Also Read: Creative Optimization in 2025: Actionable Insights Report

Conclusion

Finding winning creatives relies on process, not luck. Test many low-cost ideas quickly and evaluate by retention, lifetime value, and ROAS. Use a simple tracking system for names, tags, and versions. Break creatives into parts, such as hooks, captions, and CTAs, to test without losing successful elements. Keep testing budgets active and monitor fatigue to refresh before performance drops.

Segwise helps with this by using Creative AI agents to tag images, videos, and playables and extract element-level tags such as hooks, captions, characters, dialogues, and audio. These tags can be joined with campaign and MMP data, enabling teams to run creative analytics and tracking, and link element presence to metrics such as ROAS, CTR, CPA, and IPM.

For data and clear examples that support the steps above, read Segwise’s reports: Winning Patterns, Winning Creative Patterns in DTC, and Winning Patterns in Playable Ads.

Segwise supports launch tracking for new creatives and provides a 14-day free trial with no credit card or engineering work required.

FAQs

1. Can AI fully replace your creative team?

No. AI accelerates tagging, variant generation, and scoring, but humans remain essential for strategy, brand voice, creative approval, and making cultural or legal judgments.

2. Is it safe to share campaign or performance data with AI vendors?

It can be done with safeguards: share aggregated or hashed identifiers, use vendors that integrate with your MMP, require a data-processing agreement, limit retention and access, and review privacy/security documents before connecting live data.

3. How do you get leadership to fund higher creative testing?

Run a short pilot with a clear hypothesis, control and treatment groups, defined KPIs (e.g., D7 retention, ROAS), and a small budget. Deliver concise results, a projected ROI, and name an executive sponsor to shorten approval cycles.

4. What should you look for when evaluating an AI creative tool?

Seek transparent model outputs, reliable element-level tagging, native MMP/analytics integrations, API and export options, a trial/onboarding path, and documented security controls (DPA, encryption).

5. How soon can you expect AI-driven suggestions to move ROAS?

Tagging and early signals can appear within days of setup if data is connected. Demonstrating reliable cohort-level uplift (D7 retention or ROAS) typically requires several weeks and enough installs per variant to be statistically meaningful.
