A Beginner's Guide to A/B Testing Ads for Optimization

In mobile gaming, every ad impression counts. But how do you make sure your ads are not just seen but acted upon? With millions of games competing for players' attention, standing out takes more than flashy visuals; it demands precision. That's where A/B testing comes in.

By comparing different versions of your ads, you can identify which elements resonate most with your audience and improve your user acquisition outcomes. Are you struggling to pinpoint why some ads perform better than others? Perhaps you're unsure which creative elements drive installs or how to refine your campaigns for better results.

This post covers the fundamentals of A/B testing in mobile game advertising, with step-by-step guidance for systematically testing and optimizing your ad creatives. By the end, you'll be equipped to make data-driven decisions that strengthen your user acquisition strategy.

What is A/B Testing in Mobile Gaming?

A/B testing, also known as split testing, is a method in which two versions of an ad are shown to two randomly assigned groups of users to determine which performs better on a chosen user acquisition metric, such as click-through rate or installs.

For example, you might test two variations of an ad: one with a dynamic call-to-action and another with a static one, to see which drives more installs. This approach enables you to make data-driven decisions, optimizing your ad creatives for better performance.

Relevance to User Acquisition

In mobile gaming, attracting new players is crucial. A/B testing helps you optimize your ad creatives by systematically evaluating different variations to see which resonates most with your target audience. By understanding what drives more installs, you can refine your user acquisition strategies, reduce cost per install (CPI), and grow your player base more effectively.

To further understand the value of A/B testing, let's explore how it directly benefits your mobile game user acquisition efforts.

Benefits of A/B Testing Ads for Mobile Game User Acquisition

A/B testing ads helps you refine your user acquisition strategy by making data-backed decisions that improve ad performance. It keeps your creatives optimized for engagement, leading to better conversion rates and a stronger user base.

Here are some key benefits of A/B testing ads for mobile game user acquisition:

1. Data-Driven Decision Making

In mobile gaming, making assumptions about what works can lead to wasted resources. A/B testing enables you to base your decisions on actual user behavior rather than depending on guesswork. By comparing different versions of your ads, you can identify which elements resonate most with your audience, leading to more effective user acquisition strategies.

2. Optimized Ad Creatives

Regular A/B testing refines your ad creatives so they capture the attention of potential players. By testing variations in visuals, messaging, and calls to action, you can determine which combinations drive more installs of your game. This continuous optimization helps keep your ads relevant and appealing to your target audience.

3. Improved Conversion Rates

Testing different ad variations can raise conversion rates by identifying what drives user action. For instance, a specific call to action or visual style may lead to more installs. Applying these insights across your campaigns can increase the number of users who take the desired action, such as installing your game.

To effectively implement A/B testing and optimize your ads, it’s crucial to follow a structured process. Here’s a step-by-step guide to help you get started.

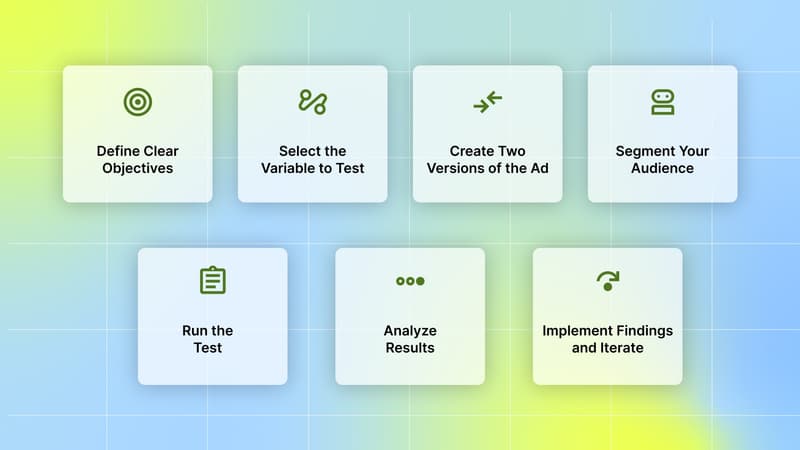

Step-by-Step Guide to A/B Testing Ads

A/B testing involves systematically testing different ad variations to determine which one resonates best with your audience. By following a clear process, you can make data-backed decisions that improve user acquisition. Here are the steps to successfully run A/B tests on your mobile game ads:

1. Define Clear Objectives

Before running an A/B test, it is crucial to define your goals clearly. What exactly are you trying to achieve with your ads? Ask questions like:

Are you looking to boost the installation rate of your game?

Is your primary focus improving the Click-Through Rate (CTR) on your ad?

Do you want to lower your Cost Per Install (CPI)?

You need clear, measurable objectives to evaluate the success of your test. For instance, if you want to improve installs, you may need to focus on the elements that drive conversion, such as the creatives or call-to-action buttons.

Why this step matters: Without a clear objective, you won’t know how to measure success, and your test results could be ambiguous. This first step sets the foundation for the entire testing process, ensuring you're focused on what matters most.

2. Select the Variable to Test

When conducting A/B testing, it's crucial to focus on a single element of your ad to isolate its impact on performance. Testing multiple variables simultaneously can confound results, making it difficult to determine which change led to observed differences.

Here are key elements you might consider testing:

Hook

The hook is the initial element that grabs the player's attention. It's often the first few seconds of a video ad or the opening line of a static ad. Testing different hooks can reveal which messaging resonates most with your target audience. For instance, compare "Unleash epic battles now!" with "Embark on a legendary adventure today!"

Ad Copy

Headlines: Experiment with different headlines to see which captures attention more effectively. For example, compare "Discover Your New Favorite Game" with "Join the Battle Now."

Descriptions: Vary the tone and content of your descriptions. Test a straightforward description like "A fast-paced action game" against one that highlights unique features, such as "Experience epic battles and unlock legendary heroes."

Messaging Style: Evaluate the impact of various messaging styles, including formal versus casual and urgent versus informative.

Call-to-Action (CTA) Buttons

Text Variations: Test different CTA phrases, such as "Install Now," "Play Free," or "Start Your Adventure," to determine which prompts higher engagement.

Placement: Experiment with the positioning of the CTA button within the ad to determine if its location affects player engagement.

Design Elements: Vary the color, size, and shape of the CTA button to identify which design attracts more clicks.

Visual Elements

Images: Compare static images with dynamic ones, or test different types of visuals, such as character-focused images versus gameplay scenes.

Videos: Assess the effectiveness of different video lengths, styles, and content. For instance, test a short teaser clip against a longer gameplay trailer.

Graphics: Experiment with various graphic styles, such as minimalist designs versus detailed illustrations, to see which resonates more with your audience.

Target Audience Segments

Demographics: Test how different age groups, genders, or locations respond to the same ad.

Behavioral Segments: Segment your users based on behaviors, such as previous gaming habits or app usage patterns, to tailor your ads more effectively.

Device Types: Evaluate how users on various devices (e.g., smartphones vs. tablets) interact with your ads.

Ad Formats

Static vs. Dynamic: Compare the performance of static ads against dynamic ad formats.

Playable Ads: Test the effectiveness of playable ads, where users can try a mini-version of the game, versus traditional video or image ads.

Interstitials vs. Banners: Evaluate the performance of full-screen interstitial ads compared to smaller banner ads to determine which yields better results.

Why this step matters: Focusing on one variable at a time ensures that any observed changes in performance can be directly attributed to that specific element. This approach provides clear insights into which aspects of your ad resonate most with your audience, allowing for targeted optimizations. By systematically testing and refining individual elements, you can enhance the overall effectiveness of your user acquisition campaigns.

3. Create Two Versions of the Ad

Now that you’ve identified your test variable, it’s time to create two versions of your ad:

Version A (Control): This is your original ad, which serves as the baseline for comparison.

Version B (Variant): This is the modified version of your ad with the change you’re testing.

Why this step matters: The only difference between the two versions should be the variable you're testing. For example, if you're testing CTA buttons, the only difference should be the wording or design of the button. Having a control version (Version A) and a modified version (Version B) ensures that you’re testing one thing at a time and making a fair comparison.

4. Segment Your Audience

Now, it’s time to split your audience into two distinct groups:

Group A: Exposed to Version A of the ad (control group).

Group B: Exposed to Version B of the ad (variant group).

Your audience must be randomly divided into these two groups to ensure unbiased results. Both groups should be similar in size and demographics to get an accurate comparison.

Why this step matters: Random assignment is key to getting unbiased data. If you don't split your audience randomly, one group may end up with a higher-quality audience, which can skew results. Random assignment ensures that each group is representative of your overall audience, leading to more reliable results.
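Most ad networks' built-in experiment tools handle this split for you. If you control assignment yourself (for example, in your own ad server or cross-promotion system), a common technique is to hash a stable user ID together with the experiment name: the result behaves like a random draw, yet the same user always lands in the same group. A minimal sketch, assuming string user IDs; the function and experiment names are hypothetical:

```python
import hashlib


def assign_group(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to group "A" (control) or "B" (variant).

    Hashing the experiment name plus the user ID gives a stable, effectively
    random bucket, so a user sees the same version for the whole test.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_group(f"user-{i}", "cta-test-01")] += 1
print(counts)  # roughly an even split between A and B
```

Including the experiment name in the hash means a user's group in one test is independent of their group in the next one.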

5. Run the Test

Launch both versions of the ad simultaneously. This ensures that external factors such as time of day, day of the week, or seasonal variations affect both versions equally rather than favoring one of them.

Monitor the performance of both ads over a sufficient period to gather meaningful data. The length of the test should be long enough to capture diverse user behaviors, but not so long that external factors could start influencing the results.

Why this step matters: Running both ads simultaneously removes time-based biases. If one version is shown at a different time, it could lead to inaccurate conclusions. Keeping both ads live for the same period helps ensure your results are driven by the ad itself, not external factors.

6. Analyze Results

Once your A/B test concludes, it's time to scrutinize the performance of both ad versions. Key performance indicators (KPIs) to focus on include:

Install Rate: Which version converted a larger share of the users who saw it into installs?

Click-Through Rate (CTR): Which ad garnered more clicks per impression?

Cost Per Install (CPI): Which version delivered installs at a lower cost?

Return on Ad Spend (ROAS): Calculate the revenue generated for every dollar spent. A higher ROAS indicates more efficient ad spend and better profitability.
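Each of these KPIs is a simple ratio of raw counts, so it helps to compute them the same way for both versions. A minimal sketch; the field names are hypothetical, install rate is assumed here to mean installs per impression (some teams use installs per click instead), and the numbers are made up for illustration:

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    impressions: int
    clicks: int
    installs: int
    spend: float    # ad spend in your account currency
    revenue: float  # revenue attributed to these installs (e.g. from your MMP)

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks per impression."""
        return self.clicks / self.impressions

    @property
    def install_rate(self) -> float:
        """Installs per impression (assumed definition)."""
        return self.installs / self.impressions

    @property
    def cpi(self) -> float:
        """Cost per install."""
        return self.spend / self.installs

    @property
    def roas(self) -> float:
        """Revenue per unit of ad spend."""
        return self.revenue / self.spend


a = VariantStats(impressions=100_000, clicks=1_500, installs=300, spend=600.0, revenue=540.0)
b = VariantStats(impressions=100_000, clicks=1_800, installs=360, spend=612.0, revenue=720.0)
for name, v in (("A", a), ("B", b)):
    print(f"{name}: CTR={v.ctr:.2%} install rate={v.install_rate:.2%} "
          f"CPI=${v.cpi:.2f} ROAS={v.roas:.2f}")
```

In this made-up example, version B has the higher CTR, install rate, and ROAS and the lower CPI; whether those gaps are real is what the significance test decides.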

To ensure the reliability of your findings, it's crucial to apply statistical significance tests. Statistical significance enables you to determine whether the differences between your ad versions are likely due to the changes you made, rather than occurring by random chance.
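For rates such as CTR or install rate, a common frequentist choice is the two-proportion z-test. The sketch below uses only Python's standard library; it is a textbook approximation that assumes large samples and independent users, not the method any particular ad platform uses, and the click counts are made up.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    conv_*: conversions (e.g. clicks or installs); n_*: trials (e.g. impressions).
    Returns (z, p_value), using the pooled rate under the null hypothesis
    that both versions share the same underlying conversion rate.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


z, p = two_proportion_z_test(conv_a=1_500, n_a=100_000, conv_b=1_800, n_b=100_000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

A p-value below the threshold you chose before the test (0.05 is a common default) suggests the difference is unlikely to be random noise alone.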

Tests often use around 100-500 installs per variant, but the sample you actually need depends on your baseline conversion rate, the smallest lift you want to detect, and your desired confidence level. Collecting enough data ensures you can assess the performance of each ad with confidence.

You can also use tools like Optimizely's sample size calculator to estimate how large a sample you need to detect the lift you expect. Running the test until it reaches that sample size, then applying a significance test, helps you tell whether observed improvements in installs, CTR, or CPI are real rather than random fluctuations.
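Sample-size calculators work from the same inputs. The sketch below uses a standard normal-approximation formula for comparing two proportions, n = (z₁₋α/₂ + z₁₋β)² · [p₁(1 − p₁) + p₂(1 − p₂)] / (p₂ − p₁)²; it is not Optimizely's exact method, so its results will differ somewhat from any given calculator. Here `baseline_rate` is your current conversion rate (for example, CTR) and `relative_lift` is the smallest relative improvement you want to detect; both parameter names are illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate sample size per variant for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Baseline CTR of 1.5%, aiming to detect a 20% relative lift (1.5% -> 1.8%):
print(sample_size_per_variant(0.015, 0.20))
```

With these inputs the function returns roughly 28,300 impressions per variant. Smaller baseline rates or smaller lifts push the required sample up quickly, which is why a fixed rule of thumb can be misleading.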

Why this step matters: Proper analysis helps identify which ad elements resonate with your audience, guiding future creative decisions. Without rigorous analysis, you risk misinterpreting data, leading to ineffective optimizations.

7. Implement Findings and Iterate

After analyzing the results, implement the insights gained from the test into your future campaigns. For example:

If Version B with a new CTA button drove more installs, use that CTA button in your future ads.

If Version A with specific visuals performed better, consider incorporating similar visuals in your next set of ads.

The key to ongoing success is continuous iteration. A/B testing isn’t a one-time effort; it’s a continuing process. Once you’ve applied the insights from one test, set up your next test to continue improving your campaigns.

Why this step matters: Continuous testing and iteration enable you to optimize your ad creatives over time. Mobile game user acquisition is a highly competitive field, and ongoing optimization helps you stay ahead by continuously refining what works.

To take your A/B testing to the next level, integrating advanced AI-powered tools like Segwise can provide deeper insights and streamline your analysis.

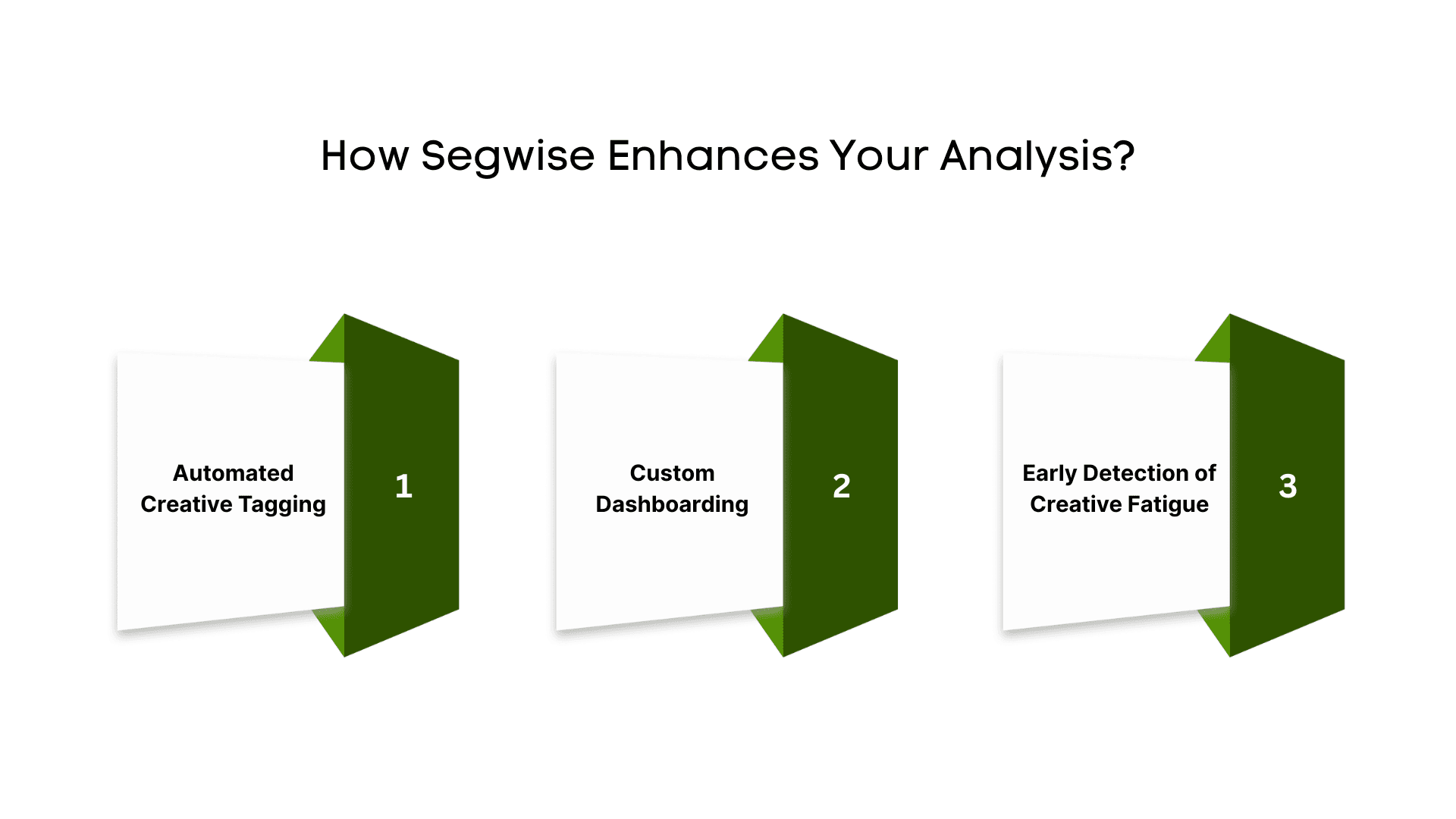

How Segwise Enhances Your Analysis

Segwise's Creative Analytics AI Agent revolutionizes how you analyze ad creatives by automating tagging and providing deep insights across various ad formats. Here's how it enhances your analysis:

Automated Creative Tagging: Segwise's AI creative agent automatically tags elements like backgrounds, calls-to-action, emotional hooks, and more in your ad creatives. This automation eliminates the need for manual tagging, saving a significant amount of time and reducing human error.

Custom Dashboarding: The platform integrates data from multiple ad networks and MMPs, offering a unified view of your creative performance. This integration enables you to create custom reports and dashboards to track the effectiveness of your ads and make data-driven decisions.

Early Detection of Creative Fatigue: The creative AI agent monitors your creatives over time, identifying signs of creative fatigue before performance declines significantly. This proactive approach enables you to refresh your ads promptly, ensuring optimal performance.

By incorporating Segwise into your ad strategy, you can streamline your creative analysis process, make data-driven decisions, and ultimately enhance your user acquisition outcomes.

To further illustrate the power of A/B testing, let’s take a look at a real-world example of how it can drive impactful user acquisition results.

Case Study: A/B Testing of the Towerlands App Icon

Towerlands is a mobile strategy game with castle defense mechanics. In this game, players build and upgrade their towers to protect against waves of enemies. The game's core mechanics focus on strategic placement, upgrading defenses, and managing resources, all while battling.

The Challenge

The challenge was to determine how the app icon influences user acquisition. The app icon is one of the first elements potential players see and can significantly impact their decision to download the game. The developers needed to determine which icon design would generate the most clicks and drive the most installs. This led them to conduct an A/B test, comparing different icon designs to identify the most effective version.

Goal of the A/B Test

The goal was to identify the app icon that would most improve click-through rate (CTR) and conversions, increasing the game's visibility in the app store and encouraging more users to install the game based on the app icon alone.

A/B Testing Process

Soft Launch: To gather initial user feedback, the game was soft-launched in Canada, Australia, Indonesia, and the Philippines. This allowed the team to test the app icon variations before launching the game more broadly.

Testing Platform: Google’s internal testing platform for Android apps was used for the A/B testing process.

Icon Variations Tested

The following variations of the app icon were tested:

2D vs. 3D Battle Tower Icons: These two versions presented different artistic styles for the central tower, which is a key element in the game.

Yelling Guy Icon: Inspired by popular games like Clash of Clans and Game of War, this icon featured an aggressive character.

Character Defending the Tower: This icon showcased characters defending the tower, which was more aligned with the game’s core theme of castle defense.

Results

The “yelling guy” icon, despite being popular among competitors, did not perform well in terms of CTR or conversions.

The icon featuring characters defending the tower outperformed all other versions. This result suggests that aligning the app icon with the game's core theme pays off: by visually representing the gameplay (tower defense), the icon resonated more with the target audience and drove more installs.

To ensure the best results from your A/B testing efforts, it’s crucial to follow some key best practices.

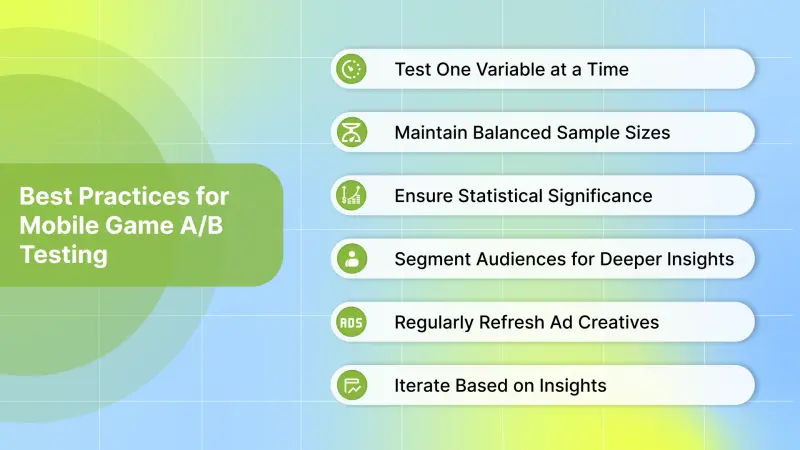

Best Practices for Mobile Game A/B Testing

To optimize your mobile game’s user acquisition efforts, it’s essential to follow best practices for A/B testing. These strategies ensure that your testing process is efficient, accurate, and leads to actionable insights. Here are some of the best practices to help you get the most out of your A/B testing campaigns:

Test One Variable at a Time: Focus on testing a single element (e.g., ad copy, visuals) to isolate its impact on performance.

Maintain Balanced Sample Sizes: Ensure equal distribution of users in test and control groups to avoid biased results.

Ensure Statistical Significance: Use statistical methods to confirm that results are meaningful and not due to random chance. If you plan to monitor results continuously, consider a Bayesian A/B testing framework with predefined stopping rules, such as those offered by platforms like VWO, to avoid the false positives associated with repeatedly peeking at results.

Segment Audiences for Deeper Insights: Divide your audience based on demographics, device types, or behaviors to refine targeting and optimize results.

Regularly Refresh Ad Creatives to Combat Ad Fatigue: Continuously update your ad creatives to keep them engaging and avoid diminishing returns over time.

Iterate Based on Insights: Continuously refine and optimize based on the learnings from A/B testing to improve future campaigns.
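To see what a Bayesian readout looks like, the sketch below estimates the probability that version B's true conversion rate exceeds version A's, using a Beta-Binomial model with uniform Beta(1, 1) priors and Monte Carlo sampling. This is a generic textbook approach, not VWO's implementation; the install counts are made up, and the decision threshold you apply to the result (for example, 95%) is your own choice.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 50_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta(1, 1) priors.

    With a uniform Beta(1, 1) prior, each variant's posterior conversion rate
    is Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical installs per 100,000 impressions for each version.
print(prob_b_beats_a(conv_a=300, n_a=100_000, conv_b=360, n_b=100_000))
```

In this made-up example the estimate comes out at roughly 0.99, which you would read as "B is very likely better" rather than as a p-value.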

Conclusion

A/B testing is essential for refining your mobile game's user acquisition strategy. By systematically testing variables such as ad copy, visuals, and calls to action, you can identify which elements resonate most with your audience, leading to improved conversion rates. This data-driven approach enables you to make informed decisions that enhance the effectiveness of your campaigns.

So, are you ready to take your user acquisition efforts to the next level? Start your 14-day free trial with Segwise today and unlock the full potential of your ad creatives.

FAQs

1. How do I choose the right creative format for my game?

Different game genres may benefit from specific ad formats. For example, hyper-casual games often perform well with playable ads, while narrative-driven games may benefit from cinematic video ads. Consider your game's unique features and target audience when selecting ad formats to ensure alignment with user expectations.

2. How can I determine if my ad creatives are experiencing fatigue?

Monitor key metrics such as CTR and conversion rates over time. A decline in these metrics may indicate creative fatigue, signaling the need for a refresh to maintain user engagement.
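As a simple starting point, you can compare a creative's recent CTR with its CTR from its first days in market and flag a sustained drop. The window lengths and the 20% threshold below are arbitrary assumptions to tune for your own campaigns, the daily CTR values are made up, and this is a generic heuristic, not how any particular analytics product detects fatigue.

```python
from statistics import mean


def fatigue_alert(daily_ctr: list[float], baseline_days: int = 7,
                  recent_days: int = 3, drop_threshold: float = 0.2) -> bool:
    """Flag possible creative fatigue when recent CTR falls well below its early baseline.

    Compares the mean CTR of the first `baseline_days` with the mean of the
    last `recent_days`; returns True if the relative drop exceeds
    `drop_threshold` (0.2 means a 20% drop).
    """
    if len(daily_ctr) < baseline_days + recent_days:
        return False  # not enough history to judge
    baseline = mean(daily_ctr[:baseline_days])
    recent = mean(daily_ctr[-recent_days:])
    if baseline == 0:
        return False  # no clicks in the baseline window; nothing to compare
    return (baseline - recent) / baseline > drop_threshold


daily_ctr = [0.021, 0.020, 0.022, 0.019, 0.020, 0.021, 0.020,  # first week
             0.016, 0.015, 0.014]                                # latest days
print(fatigue_alert(daily_ctr))  # prints True
```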

3. How can I scale successful ad creatives?

Once you've identified high-performing ad creatives, consider scaling them by increasing their reach across different platforms, expanding to new geographic regions, or targeting additional audience segments to maximize user acquisition.
